
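Android source version: 4.2.2; hardware platform: Allwinner A31

Preface:

Earlier posts mostly covered the control flow of Stagefright: how MediaExtractor, AwesomePlayer, StagefrightPlayer and OMXCodec are created in the Android architecture, and how the underlying OMXNodeInstance is created. They also covered the architecture of the lowest-level OMX plugin library and codec components, and how to create an OMX plugin of our own.

The other key to understanding the source architecture is the data flow. Starting here, we look at the codec buffers in Stagefright: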

1.

Back to the source code where OMXCodec is created:

status_t AwesomePlayer::initVideoDecoder(uint32_t flags) {

.......

mVideoSource = OMXCodec::Create(

mClient.interface(), mVideoTrack->getFormat(), // mClient.interface() is the BpOMX proxy; getFormat() gives the video track's format

false, // createEncoder: false, so no encoder is created

mVideoTrack,

NULL, flags, USE_SURFACE_ALLOC ? mNativeWindow : NULL); // create the decoder, mVideoSource

if (mVideoSource != NULL) {

int64_t durationUs;

if (mVideoTrack->getFormat()->findInt64(kKeyDuration, &durationUs)) {

Mutex::Autolock autoLock(mMiscStateLock);

if (mDurationUs < 0 || durationUs > mDurationUs) {

mDurationUs = durationUs;

}

}

status_t err = mVideoSource->start(); // start the OMXCodec decoder, which runs its init

.............

}

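The post "OMX plugins and codec components of the A31 in the Stagefright multimedia framework on Android 4.2.2" already analyzed OMXCodec::Create in detail. Here we focus on mVideoSource->start, that is, on what OMXCodec::start does: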

status_t OMXCodec::start(MetaData *meta) {

Mutex::Autolock autoLock(mLock);

........

return init(); // run the initialization

}

The call to init() here allocates the buffers, which lays the groundwork for the streaming that follows:

status_t OMXCodec::init() {

// mLock is held.

.........

err = allocateBuffers(); // allocate the buffers

if (err != (status_t)OK) {

return err;

}

if (mQuirks & kRequiresLoadedToIdleAfterAllocation) {

err = mOMX->sendCommand(mNode, OMX_CommandStateSet, OMX_StateIdle);

CHECK_EQ(err, (status_t)OK);

setState(LOADED_TO_IDLE);

}

............

}

Let's look at how allocateBuffers is implemented.

2. The implementation of allocateBuffersOnPort

status_t OMXCodec::allocateBuffers() {

status_t err = allocateBuffersOnPort(kPortIndexInput); // allocate the input port's buffers

if (err != OK) {

return err;

}

return allocateBuffersOnPort(kPortIndexOutput); // allocate the output port's buffers

}

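Buffers are allocated for the input port and the output port in turn. A decoder needs the input port to hold the data waiting to be decoded and the output port to hold the decoded data, which also matches how the hardware works. We take the input buffer allocation as the example: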

status_t OMXCodec::allocateBuffersOnPort(OMX_U32 portIndex) {

.......

OMX_PARAM_PORTDEFINITIONTYPE def;

InitOMXParams(&def);

def.nPortIndex = portIndex; // the port being allocated (the input port in this walkthrough)

err = mOMX->getParameter(

mNode, OMX_IndexParamPortDefinition, &def, sizeof(def)); // fetch the port's parameters into def

..........

err = mOMX->allocateBuffer(

mNode, portIndex, def.nBufferSize, &buffer,

&info.mData);

........

info.mBuffer = buffer; // the buffer_id, which refers to the underlying buffer's information

info.mStatus = OWNED_BY_US;

info.mMem = mem;

info.mMediaBuffer = NULL;

...........

mPortBuffers[portIndex].push(info); // store this buffer's info in mPortBuffers[portIndex]

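The process above breaks down into:

step1: Get the current parameters of the underlying decoder component. These parameters are usually given their initial configuration when the OMXCodec is created, as mentioned in the previous post.

step2: Call allocateBuffer. This call is ultimately handled by the underlying OMX component; its implementation will be analyzed together with the processing flow of the A31's low-level OMX codec component.

step3: Store the info of each allocated buffer in mPortBuffers[portIndex] (mPortBuffers[0] for the input port).

This completes the allocation of the input and output buffers, which the later decoding steps operate on.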
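The three steps can be sketched as a toy model. This is only an illustration under stated assumptions: ToyCodec, PortDefinition and the id counter are hypothetical stand-ins, and plain heap memory replaces the shared memory and the real mOMX->allocateBuffer call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins for the OMXCodec types discussed above.
enum PortIndex { kPortIndexInput = 0, kPortIndexOutput = 1 };
enum BufferStatus { OWNED_BY_US, OWNED_BY_COMPONENT, OWNED_BY_CLIENT };

struct BufferInfo {
    uint32_t mBuffer;            // opaque buffer id (assigned locally in this sketch)
    BufferStatus mStatus;        // who currently owns the buffer
    std::vector<uint8_t> mData;  // backing storage (shared memory in the real code)
};

// Assumed port definition: only the two fields this sketch needs.
struct PortDefinition {
    size_t nBufferCountActual;
    size_t nBufferSize;
};

struct ToyCodec {
    std::vector<BufferInfo> mPortBuffers[2];
    uint32_t mNextId = 1;

    // step1 is represented by `def`, step2 by the resize, step3 by push_back.
    void allocateBuffersOnPort(PortIndex port, const PortDefinition &def) {
        for (size_t i = 0; i < def.nBufferCountActual; ++i) {
            BufferInfo info;
            info.mBuffer = mNextId++;
            info.mStatus = OWNED_BY_US;
            info.mData.resize(def.nBufferSize);
            mPortBuffers[port].push_back(info);
        }
    }
};
```

Every buffer starts out as OWNED_BY_US, which is the state the later drain and fill steps look for.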

3. Starting the player from MediaPlayer

The start API call enters MediaPlayerService::Client and then passes through StagefrightPlayer and AwesomePlayer in turn, which triggers the video event for play.

void AwesomePlayer::postVideoEvent_l(int64_t delayUs) {

ATRACE_CALL();

if (mVideoEventPending) {

return;

}

mVideoEventPending = true;

mQueue.postEventWithDelay(mVideoEvent, delayUs < 0 ? 10000 : delayUs);

}

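As the previous post showed, the handler for this event is AwesomePlayer::onVideoEvent(). It is a large function, so we pick out the core part, the read call:

status_t err = mVideoSource->read(&mVideoBuffer, &options); // called repeatedly; this is OMXCodec::read, which returns a frame in mVideoBuffer

The point of read is to obtain video data that can be rendered. In other words, read pulls the encoded data from the video source and has the decoder turn it into data that can be sent to the display.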

4. The implementation of read

As you would expect, read is a fairly complex process. We start from OMXCodec's read function:

status_t OMXCodec::read(

MediaBuffer **buffer, const ReadOptions *options) {

status_t err = OK;

*buffer = NULL;

Mutex::Autolock autoLock(mLock);

drainInputBuffers(); // fill the input buffers with data from the source

if (mState == EXECUTING) {

// Otherwise mState == RECONFIGURING and this code will trigger

// after the output port is reenabled.

fillOutputBuffers();

}

}

...........

}

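The core logic of read comes down to drainInputBuffers() and fillOutputBuffers(). We look at each in turn.

5. drainInputBuffers() reads the video data to be decoded into the decoder's input port

The code is fairly involved, so it is shown here and analyzed in three parts: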

(1)

bool OMXCodec::drainInputBuffer(BufferInfo *info) {

if (mCodecSpecificDataIndex < mCodecSpecificData.size()) {

CHECK(!(mFlags & kUseSecureInputBuffers));

const CodecSpecificData *specific = mCodecSpecificData[mCodecSpecificDataIndex];

size_t size = specific->mSize;

if (!strcasecmp(MEDIA_MIMETYPE_VIDEO_AVC, mMIME) && !(mQuirks & kWantsNALFragments)) {

static const uint8_t kNALStartCode[4] = { 0x00, 0x00, 0x00, 0x01 };

CHECK(info->mSize >= specific->mSize + 4);

size += 4;

memcpy(info->mData, kNALStartCode, 4);

memcpy((uint8_t *)info->mData + 4, specific->mData, specific->mSize);

} else {

CHECK(info->mSize >= specific->mSize);

memcpy(info->mData, specific->mData, specific->mSize); // copy the codec-specific data as-is

}

mNoMoreOutputData = false;

CODEC_LOGV("calling emptyBuffer with codec specific data");

status_t err = mOMX->emptyBuffer(

mNode, info->mBuffer, 0, size,

OMX_BUFFERFLAG_ENDOFFRAME | OMX_BUFFERFLAG_CODECCONFIG,

0); // hand the buffer to the component

CHECK_EQ(err, (status_t)OK);

info->mStatus = OWNED_BY_COMPONENT;

++mCodecSpecificDataIndex;

return true;

}

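...............

Part (1) copies the codec-specific data into the storage at info->mData. Whether this data exists depends on the format of the video source (MP4, for example): the mCodecSpecificData entries are added when the OMXCodec is created and configureCodec runs, and they are presumably fields the decoder needs before it can decode. Once this data has been submitted, the actual video data is read from the source.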
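The start-code handling in part (1) can be isolated into a small helper. This is a minimal sketch, not the OMXCodec code: packCodecSpecificData and its parameters are hypothetical names, and the prependStartCode flag stands for the "AVC and no kWantsNALFragments quirk" condition.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copies codec-specific data (for example an AVC parameter set) into an
// input buffer, optionally prefixed with the 4-byte start code.
// Returns the number of bytes written, or 0 if the buffer is too small.
size_t packCodecSpecificData(const std::vector<uint8_t> &csd,
                             bool prependStartCode,
                             uint8_t *dst, size_t dstCapacity) {
    static const uint8_t kNALStartCode[4] = { 0x00, 0x00, 0x00, 0x01 };
    const size_t prefix = prependStartCode ? sizeof(kNALStartCode) : 0;

    // Same role as CHECK(info->mSize >= specific->mSize + 4), but this
    // sketch reports the problem instead of aborting.
    if (dstCapacity < csd.size() + prefix) {
        return 0;
    }
    if (prependStartCode) {
        std::memcpy(dst, kNALStartCode, sizeof(kNALStartCode));
    }
    if (!csd.empty()) {
        std::memcpy(dst + prefix, csd.data(), csd.size());
    }
    return prefix + csd.size();
}
```

The returned size plays the role of the size argument passed to emptyBuffer together with OMX_BUFFERFLAG_CODECCONFIG.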

(2)

for (;;) {

MediaBuffer *srcBuffer;

if (mSeekTimeUs >= 0) {

if (mLeftOverBuffer) {

mLeftOverBuffer->release();

mLeftOverBuffer = NULL;

}

MediaSource::ReadOptions options;

options.setSeekTo(mSeekTimeUs, mSeekMode);

mSeekTimeUs = -1;

mSeekMode = ReadOptions::SEEK_CLOSEST_SYNC;

mBufferFilled.signal();

err = mSource->read(&srcBuffer, &options); // read the actual data from the video source; here this is MPEG4Source's read

if (err == OK) {

int64_t targetTimeUs;

if (srcBuffer->meta_data()->findInt64(

kKeyTargetTime, &targetTimeUs)

&& targetTimeUs >= 0) {

CODEC_LOGV("targetTimeUs = %lld us", targetTimeUs);

mTargetTimeUs = targetTimeUs;

} else {

mTargetTimeUs = -1;

}

}

} else if (mLeftOverBuffer) {

srcBuffer = mLeftOverBuffer;

mLeftOverBuffer = NULL;

err = OK;

} else {

err = mSource->read(&srcBuffer);

}

if (err != OK) {

signalEOS = true;

mFinalStatus = err;

mSignalledEOS = true;

mBufferFilled.signal();

break;

}

if (mFlags & kUseSecureInputBuffers) {

info = findInputBufferByDataPointer(srcBuffer->data());

CHECK(info != NULL);

}

size_t remainingBytes = info->mSize - offset; // space left in the input buffer for video data

if (srcBuffer->range_length() > remainingBytes) { // the piece just read does not fit into the remaining space

if (offset == 0) {

CODEC_LOGE(

"Codec's input buffers are too small to accomodate "

"buffer read from source (info->mSize = %d, srcLength = %d)",

info->mSize, srcBuffer->range_length());

srcBuffer->release();

srcBuffer = NULL;

setState(ERROR);

return false;

}

mLeftOverBuffer = srcBuffer; // remember the buffer that could not be copied yet

break;

}

bool releaseBuffer = true;

if (mFlags & kStoreMetaDataInVideoBuffers) {

releaseBuffer = false;

info->mMediaBuffer = srcBuffer;

}

if (mFlags & kUseSecureInputBuffers) {

// Data in info is already provided at this time.

releaseBuffer = false;

CHECK(info->mMediaBuffer == NULL);

info->mMediaBuffer = srcBuffer;

} else {

CHECK(srcBuffer->data() != NULL) ;

memcpy((uint8_t *)info->mData + offset,

(const uint8_t *)srcBuffer->data()

+ srcBuffer->range_offset(),

srcBuffer->range_length()); // copy range_length() bytes from the source into the input buffer

}

int64_t lastBufferTimeUs;

CHECK(srcBuffer->meta_data()->findInt64(kKeyTime, &lastBufferTimeUs));

CHECK(lastBufferTimeUs >= 0);

if (mIsEncoder && mIsVideo) {

mDecodingTimeList.push_back(lastBufferTimeUs);

}

if (offset == 0) {

timestampUs = lastBufferTimeUs;

}

offset += srcBuffer->range_length(); // advance the write offset

if (!strcasecmp(MEDIA_MIMETYPE_AUDIO_VORBIS, mMIME)) {

CHECK(!(mQuirks & kSupportsMultipleFramesPerInputBuffer));

CHECK_GE(info->mSize, offset + sizeof(int32_t));

int32_t numPageSamples;

if (!srcBuffer->meta_data()->findInt32(

kKeyValidSamples, &numPageSamples)) {

numPageSamples = -1;

}

memcpy((uint8_t *)info->mData + offset,

&numPageSamples,

sizeof(numPageSamples));

offset += sizeof(numPageSamples);

}

if (releaseBuffer) {

srcBuffer->release();

srcBuffer = NULL;

}

++n;

if (!(mQuirks & kSupportsMultipleFramesPerInputBuffer)) {

break;

}

int64_t coalescedDurationUs = lastBufferTimeUs - timestampUs;

if (coalescedDurationUs > 250000ll) {

// Don't coalesce more than 250ms worth of encoded data at once.

break;

}

}...........

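This part is the key to pulling data from the video source, and it is done mainly through err = mSource->read(&srcBuffer, &options). mSource is passed in when the codec is created. It is the track source provided by the MediaExtractor that matches the container format. For MP4, for example, the MPEG4Extractor creates a new MPEG4Source, so the call here ends up in MPEG4Source's read member function, which in effect maintains the whole raw video stream waiting to be decoded.

We can see that after read, the for loop copies the data to be decoded into the underlying buffer piece by piece. Reading continues only while the piece just read fits into the space left in the input port's buffer, that is, while srcBuffer->range_length() <= remainingBytes, and only if the component has the kSupportsMultipleFramesPerInputBuffer quirk. Otherwise the loop breaks and moves on to the next step, keeping a piece that did not fit in mLeftOverBuffer. The loop also breaks once more than 250 ms of data has been coalesced.

Coalescing several pieces into one buffer this way keeps the processing efficient. In the end the raw video data sits in the underlying input storage at info->mData.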
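The exit conditions of the loop are easier to see in isolation. The sketch below models only sizes and timestamps; Frame, PackResult and packInputBuffer are hypothetical names, and a std::deque stands in for the media source. Unlike the real code, an oversized frame is left in the queue here rather than released.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

// Hypothetical encoded frame: only the size and timestamp matter here.
struct Frame {
    size_t size;
    int64_t timeUs;
};

struct PackResult {
    size_t frames;  // how many frames went into this input buffer
    size_t bytes;   // total bytes used (the final `offset`)
    bool error;     // a single frame did not fit into an empty buffer
};

// Packs frames from `source` into one input buffer of `capacity` bytes.
// Stops when the next frame does not fit (it stays queued, like
// mLeftOverBuffer), when only one frame per buffer is allowed, or when
// more than 250 ms of data has been coalesced.
PackResult packInputBuffer(std::deque<Frame> &source, size_t capacity,
                           bool multipleFramesPerBuffer) {
    PackResult r = {0, 0, false};
    int64_t firstTimeUs = 0;
    while (!source.empty()) {
        const Frame f = source.front();
        if (f.size > capacity - r.bytes) {
            r.error = (r.bytes == 0);  // too big even for an empty buffer
            break;
        }
        source.pop_front();
        if (r.bytes == 0) {
            firstTimeUs = f.timeUs;    // timestamp of the first frame packed
        }
        r.bytes += f.size;
        ++r.frames;
        if (!multipleFramesPerBuffer) {
            break;
        }
        if (f.timeUs - firstTimeUs > 250000) {
            break;                     // do not coalesce more than 250 ms
        }
    }
    return r;
}
```

With a 1000-byte buffer and three 400-byte frames, the first call packs two frames and leaves the third for the next call.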

(3)

err = mOMX->emptyBuffer(

mNode, info->mBuffer, 0, offset,

flags, timestampUs);

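This triggers the underlying decoder component to process the buffer. That part is left for a later analysis of the A31's low-level codec API.

6. fillOutputBuffers fills the output port buffers, which is where decoding happens: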

void OMXCodec::fillOutputBuffers() {

CHECK_EQ((int)mState, (int)EXECUTING);

...........

Vector<BufferInfo> *buffers = &mPortBuffers[kPortIndexOutput]; // the output port

for (size_t i = 0; i < buffers->size(); ++i) {

BufferInfo *info = &buffers->editItemAt(i);

if (info->mStatus == OWNED_BY_US) {

fillOutputBuffer(&buffers->editItemAt(i));

}

}

}

void OMXCodec::fillOutputBuffer(BufferInfo *info) {

CHECK_EQ((int)info->mStatus, (int)OWNED_BY_US);

if (mNoMoreOutputData) {

CODEC_LOGV("There is no more output data available, not "

"calling fillOutputBuffer");

return;

}

CODEC_LOGV("Calling fillBuffer on buffer %p", info->mBuffer);

status_t err = mOMX->fillBuffer(mNode, info->mBuffer);

if (err != OK) {

CODEC_LOGE("fillBuffer failed w/ error 0x%08x", err);

setState(ERROR);

return;

}

info->mStatus = OWNED_BY_COMPONENT;

}

Judging from the code above, fillOutputBuffer is much simpler than drainInputBuffers. What they share is that both ultimately hand control to the underlying decoder.
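Both paths hand a buffer over by changing its mStatus, so the ownership of an output buffer forms a small cycle. The sketch below is a simplified reading of that cycle, not code from OMXCodec: isValidOutputTransition is a hypothetical helper, and ownership by the native window is left out.

```cpp
#include <cassert>

enum BufferStatus {
    OWNED_BY_US,         // held by OMXCodec, free to submit or return
    OWNED_BY_COMPONENT,  // handed to the decoder via fillBuffer
    OWNED_BY_CLIENT,     // returned from read() to the player
};

// Returns true if an output buffer may move from `from` to `to` in the
// simplified cycle: US -> COMPONENT -> US -> CLIENT -> US.
bool isValidOutputTransition(BufferStatus from, BufferStatus to) {
    switch (from) {
        case OWNED_BY_US:
            // Either submitted to the decoder or handed out by read().
            return to == OWNED_BY_COMPONENT || to == OWNED_BY_CLIENT;
        case OWNED_BY_COMPONENT:
            return to == OWNED_BY_US;  // FILL_BUFFER_DONE came back
        case OWNED_BY_CLIENT:
            return to == OWNED_BY_US;  // the client released the buffer
    }
    return false;
}
```

The CHECK_EQ on mStatus at the top of fillOutputBuffer is a guard of exactly this kind: only a buffer that is OWNED_BY_US may be given to the component.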

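7. Waiting for decoded data to be filled into the output buffer; OMXCodecObserver handles the callback

Waiting for decoding to finish is implemented in read by the following loop: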

while (mState != ERROR && !mNoMoreOutputData && mFilledBuffers.empty()) {

if ((err = waitForBufferFilled_l()) != OK) { // wait for a buffer to be filled

return err;

}

}

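This shows that as long as mFilledBuffers is empty, read waits for a fill via pthread_cond_timedwait. The thread is woken up by a callback from the underlying component. The callback is registered on the low-level codec node, and in the end it is delivered through OMXCodecObserver: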

struct OMXCodecObserver : public BnOMXObserver {

OMXCodecObserver() {

}

void setCodec(const sp<OMXCodec> &target) {

mTarget = target;

}

// from IOMXObserver

virtual void onMessage(const omx_message &msg) {

sp<OMXCodec> codec = mTarget.promote();

if (codec.get() != NULL) {

Mutex::Autolock autoLock(codec->mLock);

codec->on_message(msg); // handled by OMXCodec::on_message

codec.clear();

}

}
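The observer above keeps only a weak reference to the codec and promotes it for each message. The same pattern can be sketched with the standard library, assuming std::weak_ptr::lock() as the counterpart of promote(); Message, Codec and Observer are hypothetical types for illustration only.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical message and codec types, just enough for the sketch.
struct Message { int type; };

struct Codec {
    std::mutex mLock;
    int handled = 0;
    void onMessage(const Message &) { ++handled; }
};

// Holds only a weak reference, so the observer never keeps the codec alive.
class Observer {
public:
    void setCodec(const std::shared_ptr<Codec> &target) { mTarget = target; }

    // Returns true if the message was delivered to a live codec.
    bool onMessage(const Message &msg) {
        std::shared_ptr<Codec> codec = mTarget.lock();  // like promote()
        if (!codec) {
            return false;  // the codec is already gone, drop the message
        }
        // Declared after `codec`, so the lock is released before the
        // strong reference is dropped.
        std::lock_guard<std::mutex> lock(codec->mLock);
        codec->onMessage(msg);
        return true;
    }

private:
    std::weak_ptr<Codec> mTarget;
};
```

A callback that arrives after the codec has been destroyed is simply ignored instead of touching freed memory.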

In the end the message is handled by OMXCodec::on_message. It mainly handles the EMPTY_BUFFER_DONE and FILL_BUFFER_DONE messages. We look at the callback for FILL_BUFFER_DONE:

void OMXCodec::on_message(const omx_message &msg) {

if (mState == ERROR) {

/*

* only drop EVENT messages, EBD and FBD are still

* processed for bookkeeping purposes

*/

if (msg.type == omx_message::EVENT) {

ALOGW("Dropping OMX EVENT message - we're in ERROR state.");

return;

}

}

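switch (msg.type) {

case omx_message::FILL_BUFFER_DONE: // callback from the component: an output buffer has been filled

..............

mFilledBuffers.push_back(i); // record the index of the filled output buffer in mFilledBuffers

mBufferFilled.signal(); // wake up the thread waiting in read()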

As we can see, this wakes up the read thread.
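The wait in read and the signal in on_message form a classic condition-variable handshake. Here is a minimal sketch of it with the standard library, assuming std::condition_variable in place of the Android Condition class; FilledQueue and its method names are hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <list>
#include <mutex>

// Toy version of the mFilledBuffers / mBufferFilled pair.
class FilledQueue {
public:
    // Called from the observer callback when FILL_BUFFER_DONE arrives.
    void onFillBufferDone(size_t index) {
        std::lock_guard<std::mutex> lock(mLock);
        mFilledBuffers.push_back(index);
        mBufferFilled.notify_one();
    }

    // Called from read(): blocks until a filled buffer index is available
    // or the timeout expires. Returns false on timeout.
    bool waitForFilled(size_t *index, std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mLock);
        // The predicate form re-checks the list after every wake-up, like
        // the while loop around waitForBufferFilled_l().
        if (!mBufferFilled.wait_for(lock, timeout,
                [this] { return !mFilledBuffers.empty(); })) {
            return false;
        }
        *index = mFilledBuffers.front();
        mFilledBuffers.pop_front();
        return true;
    }

private:
    std::mutex mLock;
    std::condition_variable mBufferFilled;
    std::list<size_t> mFilledBuffers;
};
```

Indices come out in the order the component filled them, which is also how read takes the front of mFilledBuffers in the next step.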

8. Fetching a usable decoded frame

size_t index = *mFilledBuffers.begin();

mFilledBuffers.erase(mFilledBuffers.begin());

BufferInfo *info = &mPortBuffers[kPortIndexOutput].editItemAt(index); // look up the decoded frame's BufferInfo on the output port

CHECK_EQ((int)info->mStatus, (int)OWNED_BY_US);

info->mStatus = OWNED_BY_CLIENT;

info->mMediaBuffer->add_ref();

if (mSkipCutBuffer != NULL) {

mSkipCutBuffer->submit(info->mMediaBuffer);

}

*buffer = info->mMediaBuffer;

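Once the thread has been woken up, it takes the filled buffer index, looks up the corresponding BufferInfo on the output port, and returns that BufferInfo's MediaBuffer from read.

9.

After steps 5, 6, 7 and 8, read finally returns an mVideoBuffer that can be displayed. What follows is how it is sent to the display. In the code below, a renderer, mVideoRenderer, is created to display the decoded video: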

if ((mNativeWindow != NULL) && (mVideoRendererIsPreview || mVideoRenderer == NULL)) { // create the renderer the first time through

mVideoRendererIsPreview = false;

initRenderer_l(); // initialize the renderer by creating a new one

}

if (mVideoRenderer != NULL) {

mSinceLastDropped++;

mVideoRenderer->render(mVideoBuffer); // render, that is, display the current buffer

if (!mVideoRenderingStarted) {

mVideoRenderingStarted = true;

notifyListener_l(MEDIA_INFO, MEDIA_INFO_RENDERING_START);

}

}

void AwesomePlayer::initRenderer_l() {

ATRACE_CALL();

if (mNativeWindow == NULL) {

return;

}

sp<MetaData> meta = mVideoSource->getFormat();

int32_t format;

const char *component;

int32_t decodedWidth, decodedHeight;

CHECK(meta->findInt32(kKeyColorFormat, &format));

CHECK(meta->findCString(kKeyDecoderComponent, &component));

CHECK(meta->findInt32(kKeyWidth, &decodedWidth));

CHECK(meta->findInt32(kKeyHeight, &decodedHeight));

int32_t rotationDegrees;

if (!mVideoTrack->getFormat()->findInt32(

kKeyRotation, &rotationDegrees)) {

rotationDegrees = 0;

}

mVideoRenderer.clear();

// Must ensure that mVideoRenderer's destructor is actually executed

// before creating a new one.

IPCThreadState::self()->flushCommands();

// Even if set scaling mode fails, we will continue anyway

setVideoScalingMode_l(mVideoScalingMode);

if (USE_SURFACE_ALLOC

&& !strncmp(component, "OMX.", 4)

&& strncmp(component, "OMX.google.", 11)

&& strcmp(component, "OMX.Nvidia.mpeg2v.decode")) { // hardware decoder: an OMX. component other than the ones excluded above

// Hardware decoders avoid the CPU color conversion by decoding

// directly to ANativeBuffers, so we must use a renderer that

// just pushes those buffers to the ANativeWindow.

mVideoRenderer =

new AwesomeNativeWindowRenderer(mNativeWindow, rotationDegrees); // the usual case: the hardware rendering path

} else {

// Other decoders are instantiated locally and as a consequence

// allocate their buffers in local address space. This renderer

// then performs a color conversion and copy to get the data

// into the ANativeBuffer.

mVideoRenderer = new AwesomeLocalRenderer(mNativeWindow, meta);

}

}
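The condition that picks the renderer in initRenderer_l() can be pulled out into a small function. This is a sketch only: chooseRenderer and RendererKind are hypothetical names, and the useSurfaceAlloc parameter stands in for the USE_SURFACE_ALLOC macro.

```cpp
#include <cassert>
#include <cstring>

enum RendererKind { kNativeWindowRenderer, kLocalRenderer };

// A component whose name starts with "OMX." but not with "OMX.google." is
// treated as a hardware decoder, with "OMX.Nvidia.mpeg2v.decode" as the
// one listed exception. Everything else gets the local renderer.
RendererKind chooseRenderer(const char *component, bool useSurfaceAlloc) {
    if (useSurfaceAlloc
            && !std::strncmp(component, "OMX.", 4)
            && std::strncmp(component, "OMX.google.", 11)
            && std::strcmp(component, "OMX.Nvidia.mpeg2v.decode")) {
        return kNativeWindowRenderer;
    }
    return kLocalRenderer;
}
```

For example, a vendor component name such as "OMX.allwinner.video.decoder.avc" (used here purely as an illustrative name) selects the native window renderer, while "OMX.google.h264.decoder" falls through to the local renderer.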

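There are two branches for creating the renderer here. A component whose name does not start with OMX., or that starts with OMX.google., is a software decoder, so its renderer is the so-called local renderer, which is in effect a software renderer. Hardware decoders take the other branch, so here we use the AwesomeNativeWindowRenderer, whose structure is as follows: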

struct AwesomeNativeWindowRenderer : public AwesomeRenderer {

AwesomeNativeWindowRenderer(

const sp<ANativeWindow> &nativeWindow,

int32_t rotationDegrees)

: mNativeWindow(nativeWindow) {

applyRotation(rotationDegrees);

}

virtual void render(MediaBuffer *buffer) {

ATRACE_CALL();

int64_t timeUs;

CHECK(buffer->meta_data()->findInt64(kKeyTime, &timeUs));

native_window_set_buffers_timestamp(mNativeWindow.get(), timeUs * 1000);

status_t err = mNativeWindow->queueBuffer(

mNativeWindow.get(), buffer->graphicBuffer().get(), -1); // queueBuffer hands the buffer over for display

if (err != 0) {

ALOGE("queueBuffer failed with error %s (%d)", strerror(-err),

-err);

return;

}

sp<MetaData> metaData = buffer->meta_data();

metaData->setInt32(kKeyRendered, 1);

}

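It is not complicated: it just implements render from the AwesomeRenderer interface, and this function is what finally displays the buffer. Here we see the familiar queueBuffer; see my post "Android 4.2.2 SurfaceFlinger: the queueBuffer implementation of graphics rendering and the presence of VSYNC". The application's native window mNativeWindow submits the current buffer to the SurfaceFlinger service for display (the player's VideoView extends SurfaceView, and SurfaceView creates a local Surface, which derives from the native window class). We will not go into the details here.

This completes the data flow of encoding and decoding under Stagefright. The complexity in the code lies mainly in emptyBuffer and fillBuffer. My own ability is limited, so many details were not analyzed thoroughly; I hope we can all exchange ideas and learn from each other.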

Android:優化內存

Android:優化內存

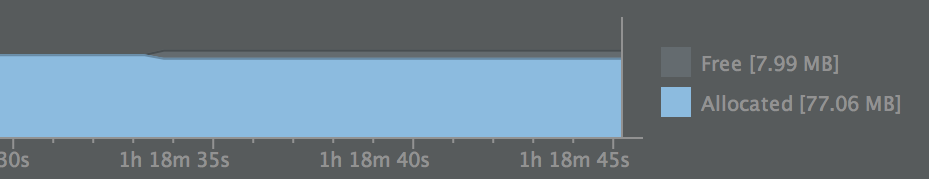

話說,從mta上報的數據上來看,我們的app出現了3起OOM(out of memery):java.lang.Throwable: java.lang.OutOfMem

Android兩個ListView共用一個萬能的BaseAdapter

Android兩個ListView共用一個萬能的BaseAdapter

升級之前的MyAdapter.javapackage run.yang.com.listviewactivedemo;import android.content.Con

Android HTTP網絡請求的異步實現

Android HTTP網絡請求的異步實現

前言大家都知道網絡操作的響應時間是不定的,所有的網絡操作都應該放在一個異步操作中處理,而且為了模塊解耦,我們希望網絡操作由專門的類來處理。所有網絡數據發送,數據接收都有某

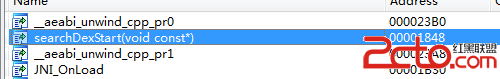

Android安卓破解之逆向分析SO常用的IDA分析技巧

Android安卓破解之逆向分析SO常用的IDA分析技巧

1、結構體的創建及導入,結構體指針等。以JniNativeInterface, DexHeader為例。解析Dex的函數如下: F5後如下:&nbs