Editor: About Android Programming

[cpp]

#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <assert.h>
#include <unistd.h>   // usleep(), sleep()
#include <android/log.h>

// for native audio
#include <SLES/OpenSLES.h>
#include <SLES/OpenSLES_Android.h>

#include "VideoPlayerDecode.h"
#include "../ffmpeg/libavutil/avutil.h"
#include "../ffmpeg/libavcodec/avcodec.h"
#include "../ffmpeg/libavformat/avformat.h"

#define LOGI(...) ((void)__android_log_print(ANDROID_LOG_INFO, "graduation", __VA_ARGS__))

AVFormatContext *pFormatCtx = NULL;
// audioStream starts at -1 so that the "no audio stream found" check can fire
int audioStream = -1, delay_time, videoFlag = 0;
AVCodecContext *aCodecCtx;
AVCodec *aCodec;
AVFrame *aFrame;
AVPacket packet;
int frameFinished = 0;

// engine interfaces
static SLObjectItf engineObject = NULL;
static SLEngineItf engineEngine;

// output mix interfaces
static SLObjectItf outputMixObject = NULL;
static SLEnvironmentalReverbItf outputMixEnvironmentalReverb = NULL;

// buffer queue player interfaces
static SLObjectItf bqPlayerObject = NULL;
static SLPlayItf bqPlayerPlay;
static SLAndroidSimpleBufferQueueItf bqPlayerBufferQueue;
static SLEffectSendItf bqPlayerEffectSend;
static SLMuteSoloItf bqPlayerMuteSolo;
static SLVolumeItf bqPlayerVolume;

// aux effect on the output mix, used by the buffer queue player
static const SLEnvironmentalReverbSettings reverbSettings =
    SL_I3DL2_ENVIRONMENT_PRESET_STONECORRIDOR;

// file descriptor player interfaces
static SLObjectItf fdPlayerObject = NULL;
static SLPlayItf fdPlayerPlay;
static SLSeekItf fdPlayerSeek;
static SLMuteSoloItf fdPlayerMuteSolo;
static SLVolumeItf fdPlayerVolume;

// pointer and size of the next player buffer to enqueue, and number of remaining buffers
static short *nextBuffer;
static unsigned nextSize;
static int nextCount;

// this callback handler is called every time a buffer finishes playing
void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context)
{
    assert(bq == bqPlayerBufferQueue);
    assert(NULL == context);

    // for streaming playback, replace this test by logic to find and fill the next buffer
    if (--nextCount > 0 && NULL != nextBuffer && 0 != nextSize) {
        SLresult result;

        // enqueue another buffer
        result = (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, nextBuffer, nextSize);

        // the most likely other result is SL_RESULT_BUFFER_INSUFFICIENT,
        // which for this code example would indicate a programming error
        assert(SL_RESULT_SUCCESS == result);
    }
}

void createEngine(JNIEnv* env, jclass clazz)
{
    SLresult result;

    // create engine
    result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);
    assert(SL_RESULT_SUCCESS == result);

    // realize the engine
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    assert(SL_RESULT_SUCCESS == result);

    // get the engine interface, which is needed in order to create other objects
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);
    assert(SL_RESULT_SUCCESS == result);

    // create output mix, with environmental reverb specified as a non-required interface
    const SLInterfaceID ids[1] = {SL_IID_ENVIRONMENTALREVERB};
    const SLboolean req[1] = {SL_BOOLEAN_FALSE};
    result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 1, ids, req);
    assert(SL_RESULT_SUCCESS == result);

    // realize the output mix
    result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
    assert(SL_RESULT_SUCCESS == result);

    // get the environmental reverb interface
    // this could fail if the environmental reverb effect is not available,
    // either because the feature is not present, excessive CPU load, or
    // the required MODIFY_AUDIO_SETTINGS permission was not requested and granted
    result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,
            &outputMixEnvironmentalReverb);
    if (SL_RESULT_SUCCESS == result) {
        result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(
                outputMixEnvironmentalReverb, &reverbSettings);
    }
    // ignore unsuccessful result codes for environmental reverb, as it is optional for this example
}

void createBufferQueueAudioPlayer(JNIEnv* env, jclass clazz, int rate, int channel, int bitsPerSample)
{
    SLresult result;

    // configure audio source
    SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
    // SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, 2, SL_SAMPLINGRATE_16,
    //     SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
    //     SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT, SL_BYTEORDER_LITTLEENDIAN};
    SLDataFormat_PCM format_pcm;
    format_pcm.formatType = SL_DATAFORMAT_PCM;
    format_pcm.numChannels = channel;
    format_pcm.samplesPerSec = rate * 1000; // OpenSL ES expects milliHertz, rate is in Hz
    format_pcm.bitsPerSample = bitsPerSample;
    format_pcm.containerSize = 16;
    if (channel == 2)
        format_pcm.channelMask = SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT;
    else
        format_pcm.channelMask = SL_SPEAKER_FRONT_CENTER;
    format_pcm.endianness = SL_BYTEORDER_LITTLEENDIAN;
    SLDataSource audioSrc = {&loc_bufq, &format_pcm};

    // configure audio sink
    SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
    SLDataSink audioSnk = {&loc_outmix, NULL};

    // create audio player
    const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND,
            /*SL_IID_MUTESOLO,*/ SL_IID_VOLUME};
    const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE,
            /*SL_BOOLEAN_TRUE,*/ SL_BOOLEAN_TRUE};
    result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject, &audioSrc, &audioSnk,
            3, ids, req);
    assert(SL_RESULT_SUCCESS == result);

    // realize the player
    result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);
    assert(SL_RESULT_SUCCESS == result);

    // get the play interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerPlay);
    assert(SL_RESULT_SUCCESS == result);

    // get the buffer queue interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE,
            &bqPlayerBufferQueue);
    assert(SL_RESULT_SUCCESS == result);

    // register callback on the buffer queue
    result = (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue, bqPlayerCallback, NULL);
    assert(SL_RESULT_SUCCESS == result);

    // get the effect send interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_EFFECTSEND,
            &bqPlayerEffectSend);
    assert(SL_RESULT_SUCCESS == result);

#if 0 // mute/solo is not supported for sources that are known to be mono, as this is
    // get the mute/solo interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_MUTESOLO, &bqPlayerMuteSolo);
    assert(SL_RESULT_SUCCESS == result);
#endif

    // get the volume interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME, &bqPlayerVolume);
    assert(SL_RESULT_SUCCESS == result);

    // set the player's state to playing
    result = (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_PLAYING);
    assert(SL_RESULT_SUCCESS == result);
}

void AudioWrite(const void *buffer, int size)
{
    (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, buffer, size);
}

JNIEXPORT jint JNICALL Java_com_zhangjie_graduation_videopalyer_jni_VideoPlayerDecode_VideoPlayer
(JNIEnv *env, jclass clz, jstring fileName)
{
    int i, ret;
    int flag_start = 0;
    int codec_opened = 0;
    int result = -1; // value returned to Java; set to 0 after a normal run
    const char* local_title = (*env)->GetStringUTFChars(env, fileName, NULL);

    if (local_title == NULL)
        return -1;

    audioStream = -1; // no audio stream found yet

    av_register_all(); // register all supported file formats and codecs

    /*
     * Only reads the file header; the stream information is not filled in yet
     */
    if (avformat_open_input(&pFormatCtx, local_title, NULL, NULL) != 0)
        goto done;

    /*
     * Get the stream information. This function reads packets to determine
     * the details of every stream in the file and points pFormatCtx->streams
     * at them. It does not change the file position; the packets it reads
     * are kept for the decoding that follows.
     */
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
        goto done;

    /*
     * Print the file information, the same details ffmpeg shows on the
     * command line. The second argument selects which stream to report;
     * -1 lets ffmpeg choose. The last argument says whether the context
     * is an output file; ours is an input file, so it must be 0.
     */
    av_dump_format(pFormatCtx, -1, local_title, 0);

    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
    {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO) {
            audioStream = i;
            break;
        }
    }
    if (audioStream < 0)
        goto done;

    aCodecCtx = pFormatCtx->streams[audioStream]->codec;
    aCodec = avcodec_find_decoder(aCodecCtx->codec_id);
    if (aCodec == NULL)
        goto done; // no decoder available for this codec
    if (avcodec_open2(aCodecCtx, aCodec, NULL) < 0)
        goto done;
    codec_opened = 1;

    aFrame = avcodec_alloc_frame();
    if (aFrame == NULL)
        goto done;

    createEngine(env, clz);

    while (videoFlag != -1)
    {
        if (av_read_frame(pFormatCtx, &packet) < 0)
            break;
        if (packet.stream_index == audioStream)
        {
            ret = avcodec_decode_audio4(aCodecCtx, aFrame, &frameFinished, &packet);
            if (ret > 0 && frameFinished)
            {
                if (flag_start == 0)
                {
                    flag_start = 1;
                    createBufferQueueAudioPlayer(env, clz, aCodecCtx->sample_rate, aCodecCtx->channels, SL_PCMSAMPLEFORMAT_FIXED_16);
                }
                int data_size = av_samples_get_buffer_size(
                        aFrame->linesize, aCodecCtx->channels,
                        aFrame->nb_samples, aCodecCtx->sample_fmt, 1);
                LOGI("audioDecodec :%d : %d, :%d :%d", data_size, aCodecCtx->channels, aFrame->nb_samples, aCodecCtx->sample_rate);
                // the player was created for 16-bit PCM, so this assumes the
                // decoder outputs packed signed 16-bit samples
                (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, aFrame->data[0], data_size);
            }
        }
        usleep(5000);
        while (videoFlag != 0)
        {
            if (videoFlag == 1) // paused
            {
                sleep(1);
            } else if (videoFlag == -1) // stopped
            {
                break;
            }
        }
        av_free_packet(&packet);
    }
    result = 0;

done:
    // single exit path, so the UTF string and FFmpeg objects are released on errors too
    if (aFrame != NULL) {
        av_free(aFrame);
        aFrame = NULL;
    }
    if (codec_opened)
        avcodec_close(aCodecCtx);
    if (pFormatCtx != NULL)
        avformat_close_input(&pFormatCtx);
    (*env)->ReleaseStringUTFChars(env, fileName, local_title);
    return result;
}

JNIEXPORT jint JNICALL Java_com_zhangjie_graduation_videopalyer_jni_VideoPlayerDecode_VideoPlayerPauseOrPlay
(JNIEnv *env, jclass clz)
{
    if (videoFlag == 1)
    {
        videoFlag = 0;
    } else if (videoFlag == 0) {
        videoFlag = 1;
    }
    return videoFlag;
}

JNIEXPORT jint JNICALL Java_com_zhangjie_graduation_videopalyer_jni_VideoPlayerDecode_VideoPlayerStop
(JNIEnv *env, jclass clz)
{
    videoFlag = -1;
    return videoFlag; // the function is declared jint, so it must return a value
}
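The listing creates the player for 16-bit PCM but enqueues `aFrame->data[0]` unchanged. That only matches when the decoder outputs packed signed 16-bit samples; other decoders may output a different `sample_fmt`. The following is a minimal sketch, assuming the decoder produces planar float samples (one array per channel); the helper names `float_to_s16` and `planar_float_to_s16` are hypothetical and not part of FFmpeg or of the original code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: clamp one float sample to [-1.0, 1.0] and scale it
 * to a signed 16-bit value. */
static int16_t float_to_s16(float s)
{
    if (s > 1.0f)  s = 1.0f;
    if (s < -1.0f) s = -1.0f;
    return (int16_t)(s * 32767.0f);
}

/* Interleave `channels` planar float planes into one packed 16-bit buffer.
 * planes[c][i] is sample i of channel c; `out` must have room for
 * nb_samples * channels int16_t values.
 * Returns the number of bytes written, which is the size to enqueue. */
static size_t planar_float_to_s16(const float *const *planes, int channels,
                                  int nb_samples, int16_t *out)
{
    assert(planes != NULL && out != NULL && channels > 0 && nb_samples >= 0);
    for (int i = 0; i < nb_samples; i++)
        for (int c = 0; c < channels; c++)
            out[i * channels + c] = float_to_s16(planes[c][i]);
    return (size_t)nb_samples * (size_t)channels * sizeof(int16_t);
}
```

Under that assumption, the decode loop would convert each frame into a buffer of its own and enqueue the converted buffer and the returned byte count, rather than `aFrame->data[0]` and `data_size`.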

Next, add OpenSL ES library support in Android.mk, as follows:

[cpp]

LOCAL_PATH := $(call my-dir)

#######################################################
##########         ffmpeg-prebuilt              #######
#######################################################
#declare the prebuilt library
include $(CLEAR_VARS)
LOCAL_MODULE := ffmpeg-prebuilt
LOCAL_SRC_FILES := ffmpeg/android/armv7-a/libffmpeg-neon.so
LOCAL_EXPORT_C_INCLUDES := ffmpeg/android/armv7-a/include
LOCAL_EXPORT_LDLIBS := ffmpeg/android/armv7-a/libffmpeg-neon.so
LOCAL_PRELINK_MODULE := true
include $(PREBUILT_SHARED_LIBRARY)

########################################################
##              ffmpeg-test-neon.so             ########
########################################################
include $(CLEAR_VARS)
TARGET_ARCH_ABI=armeabi-v7a
LOCAL_ARM_MODE=arm
LOCAL_ARM_NEON=true
LOCAL_ALLOW_UNDEFINED_SYMBOLS=false
LOCAL_MODULE := ffmpeg-test-neon
#LOCAL_SRC_FILES := jniffmpeg/VideoPlayerDecode.c
LOCAL_SRC_FILES := jniffmpeg/Decodec_Audio.c
LOCAL_C_INCLUDES := $(LOCAL_PATH)/ffmpeg/android/armv7-a/include \
                    $(LOCAL_PATH)/ffmpeg \
                    $(LOCAL_PATH)/ffmpeg/libavutil \
                    $(LOCAL_PATH)/ffmpeg/libavcodec \
                    $(LOCAL_PATH)/ffmpeg/libavformat \
                    $(LOCAL_PATH)/ffmpeg/libswscale \
                    $(LOCAL_PATH)/jniffmpeg \
                    $(LOCAL_PATH)
LOCAL_SHARED_LIBRARY := ffmpeg-prebuilt
LOCAL_LDLIBS := -llog -lGLESv2 -ljnigraphics -lz -lm $(LOCAL_PATH)/ffmpeg/android/armv7-a/libffmpeg-neon.so
LOCAL_LDLIBS += -lOpenSLES
include $(BUILD_SHARED_LIBRARY)

Because OpenSL ES requires at least API level 9, set the platform in Application.mk:

[cpp]

# The ARMv7 is significantly faster due to the use of the hardware FPU
APP_ABI := armeabi
APP_PLATFORM := android-9
APP_STL := stlport_static
APP_CPPFLAGS += -fno-rtti
#APP_ABI := armeabi

Finally, run ndk-build in a terminal; the code is compiled into the ffmpeg-test-neon.so library.

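Finally, calling the VideoPlayer function from the Android side automatically plays the video's audio track. In testing, the audio was recognizable but noisy. The sample rate may be set incorrectly, or some of the other parameters may be read wrongly. The next chapter focuses on fixing this. It also switches to a queue: audio packets read from the video go into a packet queue, and are then taken out of the queue, decoded, and played.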
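The queue-based approach mentioned for the next chapter can be sketched as a small fixed-size FIFO. This is only an illustration under stated assumptions: `AudioPacket`, `PacketQueue`, `QUEUE_CAPACITY`, and the `queue_*` functions are hypothetical names, the packet type is a stand-in for `AVPacket`, and the sketch is not thread-safe.

```c
#include <assert.h>
#include <stddef.h>

#define QUEUE_CAPACITY 4   /* hypothetical size, chosen only for illustration */

/* Stand-in for an audio packet; real code would store an AVPacket. */
typedef struct {
    const void *data;
    int size;
} AudioPacket;

/* Fixed-size ring buffer holding packets in arrival order. */
typedef struct {
    AudioPacket items[QUEUE_CAPACITY];
    int head;   /* index of the oldest packet */
    int count;  /* number of packets currently queued */
} PacketQueue;

static void queue_init(PacketQueue *q)
{
    q->head = 0;
    q->count = 0;
}

/* Append a packet. Returns 1 on success, 0 if the queue is full. */
static int queue_put(PacketQueue *q, AudioPacket pkt)
{
    if (q->count == QUEUE_CAPACITY)
        return 0;
    q->items[(q->head + q->count) % QUEUE_CAPACITY] = pkt;
    q->count++;
    return 1;
}

/* Remove the oldest packet into *out. Returns 1 on success, 0 if empty. */
static int queue_get(PacketQueue *q, AudioPacket *out)
{
    if (q->count == 0)
        return 0;
    *out = q->items[q->head];
    q->head = (q->head + 1) % QUEUE_CAPACITY;
    q->count--;
    return 1;
}
```

In a player, the reading side would call `queue_put` for each audio packet and the decoding side would call `queue_get`. If the two sides run on different threads, both calls would need to be protected by a lock.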

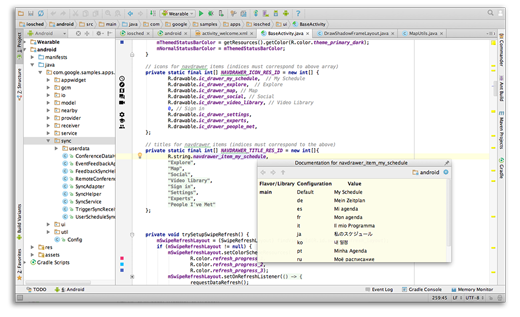

Android Studio重構之路

Android Studio重構之路

Android Studio,自Google2013年發布以來,就倍受Android開發者的喜愛,我們本書,就是基於Android Studio來進行案例演示的,大家都

Android 使用Path實現塗鴉功能

Android 使用Path實現塗鴉功能

今天實現一個塗鴉效果,會分幾步實現,這裡有一個重要的知識點就是圖層,要理解這個,不然你看這篇博客,很迷茫,迷茫的蒼茫的天涯是我的愛,先從簡單的需求做起,繪制一條線,代碼如

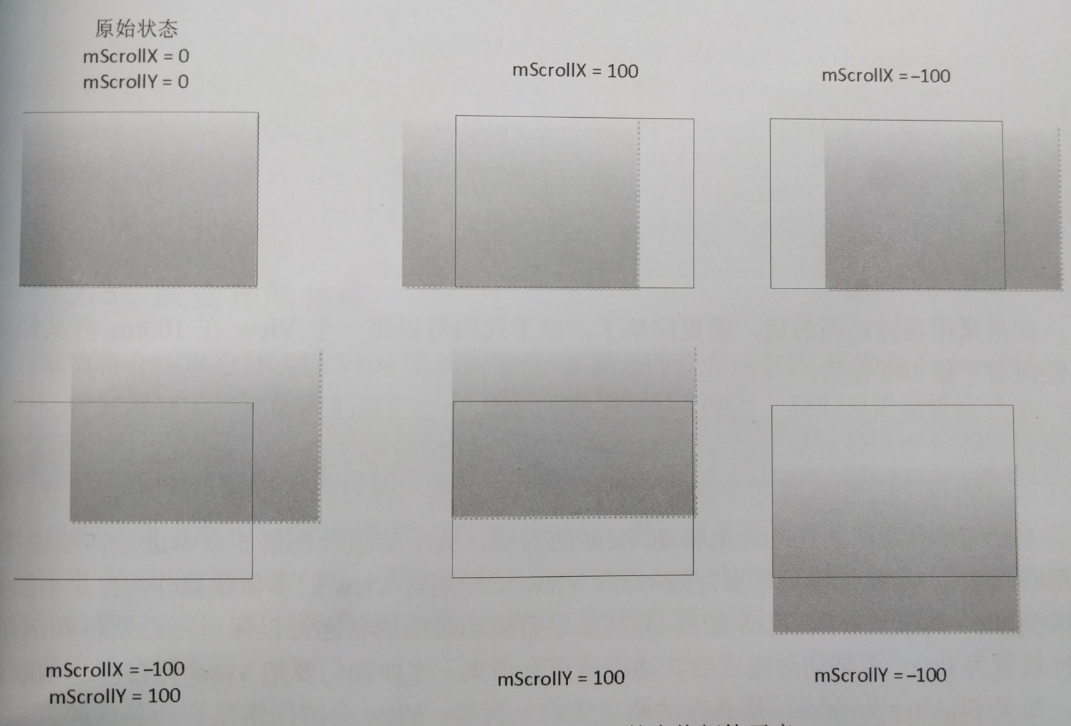

scrollTo + Scroller + ViewDragHelper

scrollTo + Scroller + ViewDragHelper

看標題就知道這篇文章講的主要是view滑動的相關內容。 ScrollTo && ScrollBy 先看下源碼: public void sc

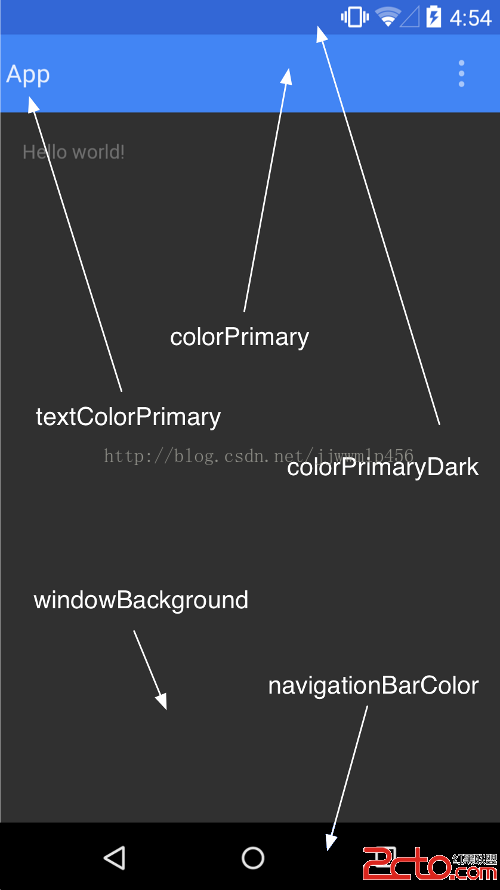

Android(Lollipop/5.0) Material Design(三) 使用Material主題

Android(Lollipop/5.0) Material Design(三) 使用Material主題

官網地址:https://developer.android.com/intl/zh-tw/training/material/theme.html 新的Material