編輯:關於android開發

PostgreSQL的流復制為HA提供了很好的支持,但是部署HA集群還需要專門的HA組件, 比如通用的Pacemaker+Corosync。pgpool作為PostgreSQL的中間件,也提供HA功能。

pgpool可以監視後端PostgreSQL的健康並實施failover,由於應用的所有流量都經過pgpool,可以很容易對故障節點進行隔離, 但,同時必須為pgpool配置備機,防止pgpool本身成為單點。pgpool自身帶watchdog組件通過quorum機制防止腦裂, 因此建議pgpool節點至少是3個,並且是奇數。在失去quorum後watchdog會自動摘除VIP,並阻塞客戶端連接。

下面利用pgpool搭建3節點PostgreSQL流復制的HA集群。 集群的目標為強數據一致HA,實現思路如下:

3個節點配置好主機名解析(/etc/hosts)

將pgsql_primary解析為主節點的IP

[postgres@node3 ~]$ cat /etc/hosts127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4::1 localhost localhost.localdomain localhost6 localhost6.localdomain6192.168.0.211 node1192.168.0.212 node2192.168.0.213 node3192.168.0.211 pgsql_primary

3個節點事先裝好PostgreSQL,並配置1主2從同步流復制,node1是主節點。

在2個Slave節點node2和node3上設置recovery.conf中的復制源的主機名為pgsql_primary

[postgres@node3 ~]$ cat /data/postgresql/data/recovery.conf standby_mode = 'on'primary_conninfo = 'host=pgsql_primary port=5433 application_name=node3 user=replication password=replication keepalives_idle=60 keepalives_interval=5 keepalives_count=5'restore_command = ''recovery_target_timeline = 'latest'

yum install http://www.pgpool.net/yum/rpms/3.5/redhat/rhel-7-x86_64/pgpool-II-release-3.5-1.noarch.rpmyum install pgpool-II-pg95 pgpool-II-pg95-extensions

[postgres@node1 ~]$ psql template1 -p5433 psql (9.5.2)Type "help" for help.template1=# CREATE EXTENSION pgpool_recovery;

pgpool_recovery擴展定義了4個函數用於遠程控制PG,這樣可以避免了對ssh的依賴,不過下面的步驟沒有用到這些函數。

template1=> \dx+ pgpool_recovery Objects in extension "pgpool_recovery" Object Description ----------------------------------------------- function pgpool_pgctl(text,text) function pgpool_recovery(text,text,text) function pgpool_recovery(text,text,text,text) function pgpool_remote_start(text,text) function pgpool_switch_xlog(text)(5 rows)

以下是node3上的配置,node1和node2節點上參照設置

$ cp /etc/pgpool-II/pgpool.conf.sample-stream /etc/pgpool-II/pgpool.conf$ vi /etc/pgpool-II/pgpool.conflisten_addresses = '*'port = 9999pcp_listen_addresses = '*'pcp_port = 9898backend_hostname0 = 'node1'backend_port0 = 5433backend_weight0 = 1backend_data_directory0 = '/data/postgresql/data'backend_flag0 = 'ALLOW_TO_FAILOVER'backend_hostname1 = 'node2'backend_port1 = 5433backend_weight1 = 1backend_data_directory1 = '/data/postgresql/data'backend_flag1 = 'ALLOW_TO_FAILOVER'backend_hostname2 = 'node3'backend_port2 = 5433backend_weight2 = 1backend_data_directory2 = '/data/postgresql/data'backend_flag2 = 'ALLOW_TO_FAILOVER'enable_pool_hba = offpool_passwd = 'pool_passwd'pid_file_name = '/var/run/pgpool/pgpool.pid'logdir = '/var/log/pgpool'connection_cache = onreplication_mode = offload_balance_mode = onmaster_slave_mode = onmaster_slave_sub_mode = 'stream'sr_check_period = 10sr_check_user = 'admin'sr_check_password = 'admin'sr_check_database = 'postgres'delay_threshold = 10000000follow_master_command = ''health_check_period = 3health_check_timeout = 20health_check_user = 'admin'health_check_password = 'admin'health_check_database = 'postgres'health_check_max_retries = 0health_check_retry_delay = 1connect_timeout = 10000failover_command = '/home/postgres/failover.sh %h %H %d %P'failback_command = ''fail_over_on_backend_error = onsearch_primary_node_timeout = 10use_watchdog = onwd_hostname = 'node3' ##設置本節點的節點名wd_port = 9000wd_priority = 1wd_authkey = ''wd_ipc_socket_dir = '/tmp'delegate_IP = '192.168.0.220'if_cmd_path = '/usr/sbin'if_up_cmd = 'ip addr add $_IP_$/24 dev eno16777736 label eno16777736:0'if_down_cmd = 'ip addr del $_IP_$/24 dev eno16777736'arping_path = '/usr/sbin'arping_cmd = 'arping -U $_IP_$ -w 1 -I eno16777736'wd_monitoring_interfaces_list = ''wd_lifecheck_method = 'heartbeat'wd_interval = 10wd_heartbeat_port = 9694wd_heartbeat_keepalive = 2wd_heartbeat_deadtime = 30heartbeat_destination0 = 'node1' ##設置其它PostgreSQL節點的節點名heartbeat_destination_port0 = 9694heartbeat_device0 = 'eno16777736'heartbeat_destination1 = 'node2' ##設置其它PostgreSQL節點的節點名heartbeat_destination_port1 = 9694heartbeat_device1 = 'eno16777736'other_pgpool_hostname0 = 'node1' ##設置其它pgpool節點的節點名other_pgpool_port0 = 9999other_wd_port0 = 9000other_pgpool_hostname0 = 'node2' ##設置其它pgpool節點的節點名other_pgpool_port0 = 9999other_wd_port0 = 9000

pgpool-II 有一個用於管理功能的接口,用於通過網絡獲取數據庫節點信息、關閉 pgpool-II 等。要使用 PCP 命令,必須進行用戶認證。這需要在 pcp.conf 文件中定義一個用戶和密碼。

$ pg_md5 pgpoolba777e4c2f15c11ea8ac3be7e0440aa0$ vi /etc/pgpool-II/pcp.confroot:ba777e4c2f15c11ea8ac3be7e0440aa0

為了免去每次執行pcp命令都輸入密碼的麻煩,可以配置免密碼文件。

$ vi ~/.pcppasslocalhost:9898:root:pgpool$ chmod 0600 ~/.pcppass

pgpool可以按照和PostgreSQL的hba.conf類似的方式配置自己的主機認證,所有連接到pgpool上的客戶端連接將接受認證,這解決了後端PostgreSQL無法直接對前端主機進行IP地址限制的問題。

開啟pgpool的hba認證

$ vi /etc/pgpool-II/pgpool.confenable_pool_hba = on

編輯pool_hba.conf,注意客戶端的認證請求最終還是要被pgpool轉發到後端的PostgreSQL上去,所以pool_hba.conf上的配置應和後端的hba.conf一致,比如pgpool對客戶端的連接采用md5認證,那麼PostgreSQL對這個pgpool轉發的連接也要采用md5認證,並且密碼相同。

$ vi /etc/pgpool-II/pool_hba.conf

如果pgpool使用了md5認證,需要在pgpool上設置密碼文件。

密碼文件名通過pgpool.conf中的pool_passwd參數設置,默認為/etc/pgpool-II/pool_passwd

設置pool_passwd的方法如下。

$ pg_md5 -m -u admin admin

分別在3個節點上啟動pgpool。

[root@node3 ~]# service pgpool startRedirecting to /bin/systemctl start pgpool.service

檢查pgpool日志輸出,確認啟動成功。

[root@node3 ~]# tail /var/log/messagesNov 8 12:53:47 node3 pgpool: 2016-11-08 12:53:47: pid 31078: LOG: pgpool-II successfully started. version 3.5.4 (ekieboshi)

通過pcp_watchdog_info命令確認集群狀況

[root@node3 ~]# pcp_watchdog_info -w -vWatchdog Cluster Information Total Nodes : 3Remote Nodes : 2Quorum state : QUORUM EXISTAlive Remote Nodes : 2VIP up on local node : NOMaster Node Name : Linux_node2_9999Master Host Name : node2Watchdog Node Information Node Name : Linux_node3_9999Host Name : node3Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 7Status Name : STANDBYNode Name : Linux_node1_9999Host Name : node1Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 7Status Name : STANDBYNode Name : Linux_node2_9999Host Name : node2Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 4Status Name : MASTER

通過psql命令確認集群狀況

[root@node3 ~]# psql -hnode3 -p9999 -U admin postgres...postgres=> show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt ---------+----------+------+--------+-----------+---------+------------ 0 | node1 | 5433 | 2 | 0.333333 | standby | 0 1 | node2 | 5433 | 2 | 0.333333 | standby | 0 2 | node3 | 5433 | 2 | 0.333333 | primary | 0(3 rows)

准備failover腳本,並部署在3個節點上

#!/bin/bashpgsql_nodes="node1 node2 node3"logfile=/var/log/pgpool/failover.logdown_node=$1new_master=$2down_node_id=$3old_master_id=$4old_master=$down_nodeexport PGDATA="/data/postgresql/data"export PGPORT=5433export PGDATABASE=postgresexport PGUSER=adminexport PGPASSWORD=admintrigger_command="pg_ctl -D $PGDATA promote -m fast"stop_command="pg_ctl -D $PGDATA stop -m fast"start_command="pg_ctl -D $PGDATA start"restart_command="pg_ctl -D $PGDATA restart -m fast"CHECK_XLOG_LOC_SQL="select pg_last_xlog_replay_location(),pg_last_xlog_receive_location()"log(){ echo "$*" >&2 echo "`date +'%Y-%m-%d %H:%M:%S'` $*" >> $logfile}# Execulte SQL and return the result.exec_sql() { local host="$1" local sql="$2" local output local rc output=`psql -h $host -Atc "$sql"` rc=$? echo $output return $rc}get_xlog_location() { local rc local output local replay_loc local receive_loc local output1 local output2 local log1 local log2 local newer_location local target_host=$1 output=`exec_sql "$target_host" "$CHECK_XLOG_LOC_SQL"` rc=$? if [ $rc -ne 0 ]; then log "Can't get xlog location from $target_host.(rc=$rc)" exit 1 fi replay_loc=`echo $output | cut -d "|" -f 1` receive_loc=`echo $output | cut -d "|" -f 2` output1=`echo "$replay_loc" | cut -d "/" -f 1` output2=`echo "$replay_loc" | cut -d "/" -f 2` log1=`printf "%08s\n" $output1 | sed "s/ /0/g"` log2=`printf "%08s\n" $output2 | sed "s/ /0/g"` replay_loc="${log1}${log2}" output1=`echo "$receive_loc" | cut -d "/" -f 1` output2=`echo "$receive_loc" | cut -d "/" -f 2` log1=`printf "%08s\n" $output1 | sed "s/ /0/g"` log2=`printf "%08s\n" $output2 | sed "s/ /0/g"` receive_loc="${log1}${log2}" newer_location=`printf "$replay_loc\n$receive_loc" | sort -r | head -1` echo "$newer_location" return 0}get_newer_location(){ local newer_location newer_location=`printf "$1\n$2" | sort -r | head -1` echo "$newer_location"}log "##########failover start:$0 $*"# if standby down do nothingif [ "X$down_node_id" != "X$old_master_id" ]; then log "standby node '$down_node' down,skip" exitfi# check the old_master deadlog "check the old_master '$old_master' dead ..."exec_sql $old_master "select 1" >/dev/null 2>&1if [ $? -eq 0 ]; then log "the old master $old_master is alive, cancel faiover" exit 1fi# check all nodes other than the old master alive and is standbylog "check all nodes '$pgsql_nodes' other than the old master alive and is standby ..."for host in $pgsql_nodes ; do if [ $host != $old_master ]; then is_in_recovery=`exec_sql $host "select pg_is_in_recovery()"` if [ $? -ne 0 ]; then log "failed to check $host" exit 1 fi if [ "$is_in_recovery" != 't' ];then log "$host is not a valid standby(is_in_recovery=$is_in_recovery)" exit fi fidone# find the node with the newer xloglog "find the node with the newer xlog ..."# TODO wait for all xlog replayednewer_location=$(get_xlog_location $new_master)log "$new_master : $newer_location"new_primary=$new_masterfor host in $pgsql_nodes ; do if [ $host != $new_primary -a $host != $old_master ]; then location=$(get_xlog_location $host) log "$host : $location" if [ "$newer_location" != "$(get_newer_location $location $newer_location)" ]; then newer_location=$location new_primary=$host log "change new primary to $new_primary" fi fidone# change replication source to the new primary in all standbysfor host in $pgsql_nodes ; do if [ $host != $new_primary -a $host != $old_master ]; then log "change replication source to $new_primary in $host ..." output=`ssh -T $host "/home/postgres/change_replication_source.sh $new_primary" 2>&1` rc=$? log "$output" if [ $rc -ne 0 ]; then log "failed to change replication source to $new_primary in $host" exit 1 fi fidone# trigger failoverlog "trigger failover to '$new_primary' ..."ssh -T $new_primary su - postgres -c "'$trigger_command'"rc=$?log "fire promote '$new_primary' to be the new primary (rc=$rc)"exit $rc #!/bin/bashnew_primary=$1cat /etc/hosts | grep -v ' pgsql_primary$' >/tmp/hosts.tmpecho "`resolveip -s $new_primary` pgsql_primary" >>/tmp/hosts.tmpcp -f /tmp/hosts.tmp /etc/hostsrm -f /tmp/hosts.tmp

添加2個腳本的執行權限

[postgres@node1 ~]# chmod +x /home/postgres/failover.sh /home/postgres/change_replication_source.sh

注:以上腳本並不十分嚴謹,僅供參考。

故障發生前的集群狀態

[root@node3 ~]# psql -h192.168.0.220 -p9999 -U admin postgresPassword for user admin: psql (9.5.2)Type "help" for help.postgres=> show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt ---------+----------+------+--------+-----------+---------+------------ 0 | node1 | 5433 | 2 | 0.333333 | primary | 3 1 | node2 | 5433 | 2 | 0.333333 | standby | 0 2 | node3 | 5433 | 2 | 0.333333 | standby | 0(3 rows)postgres=> select inet_server_addr(); inet_server_addr ------------------ 192.168.0.211(1 row

殺死主節點的postgres進程

[root@node1 ~]# killall -9 postgres

檢查集群狀態,已經切換到node2

postgres=> show pool_nodes;FATAL: unable to read data from DB node 0DETAIL: EOF encountered with backendserver closed the connection unexpectedly This probably means the server terminated abnormally before or while processing the request.The connection to the server was lost. Attempting reset: Succeeded.postgres=> show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt ---------+----------+------+--------+-----------+---------+------------ 0 | node1 | 5433 | 3 | 0.333333 | standby | 27 1 | node2 | 5433 | 2 | 0.333333 | primary | 11 2 | node3 | 5433 | 2 | 0.333333 | standby | 0(3 rows)postgres=> select inet_server_addr(); inet_server_addr ------------------ 192.168.0.212(1 row)

恢復node1為新主的Slave

修改pgsql_primary的名稱解析為新主的ip

vi /etc/hosts...192.168.0.212 pgsql_primary

從新主上拉備份恢復

su - postgrescp /data/postgresql/data/recovery.done /tmp/rm -rf /data/postgresql/datapg_basebackup -hpgsql_primary -p5433 -Ureplication -D /data/postgresql/data -X stream -Pcp /tmp/recovery.done /data/postgresql/data/recovery.confpg_ctl -D /data/postgresql/data startexit

將node1加入集群

pcp_attach_node -w 0

確認集群狀態

postgres=> show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt ---------+----------+------+--------+-----------+---------+------------ 0 | node1 | 5433 | 1 | 0.333333 | standby | 27 1 | node2 | 5433 | 2 | 0.333333 | primary | 24 2 | node3 | 5433 | 2 | 0.333333 | standby | 0(3 rows)

地址被占用pgpool啟動失敗

Nov 15 02:33:56 node3 pgpool: 2016-11-15 02:33:56: pid 3868: FATAL: failed to bind a socket: "/tmp/.s.PGSQL.9999"Nov 15 02:33:56 node3 pgpool: 2016-11-15 02:33:56: pid 3868: DETAIL: bind socket failed with error: "Address already in use"

由於上次沒有正常關閉導致,處理方法:

rm -f /tmp/.s.PGSQL.9999

pgpool的master斷網後,連接阻塞

切換pgpool的master節點(node1)的網絡後,通過pgpool的連接阻塞,剩余節點的pgpool重新協商出新的Master,但阻塞繼續,包括新建連接,也沒有發生切換。

pgpool的日志裡不斷輸出下面的消息

Nov 15 23:12:37 node3 pgpool: 2016-11-15 23:12:37: pid 4088: ERROR: Failed to check replication time lagNov 15 23:12:37 node3 pgpool: 2016-11-15 23:12:37: pid 4088: DETAIL: No persistent db connection for the node 0Nov 15 23:12:37 node3 pgpool: 2016-11-15 23:12:37: pid 4088: HINT: check sr_check_user and sr_check_passwordNov 15 23:12:37 node3 pgpool: 2016-11-15 23:12:37: pid 4088: CONTEXT: while checking replication time lagNov 15 23:12:39 node3 pgpool: 2016-11-15 23:12:39: pid 4088: LOG: failed to connect to PostgreSQL server on "node1:5433", getsockopt() detected error "No route to host"Nov 15 23:12:39 node3 pgpool: 2016-11-15 23:12:39: pid 4088: ERROR: failed to make persistent db connectionNov 15 23:12:39 node3 pgpool: 2016-11-15 23:12:39: pid 4088: DETAIL: connection to host:"node1:5433" failed

node2和node3已經協商出新主,但連接阻塞狀態一直繼續,除非解禁舊master的網卡。

[root@node3 ~]# pcp_watchdog_info -w -vWatchdog Cluster Information Total Nodes : 3Remote Nodes : 2Quorum state : QUORUM EXISTAlive Remote Nodes : 2VIP up on local node : YESMaster Node Name : Linux_node3_9999Master Host Name : node3Watchdog Node Information Node Name : Linux_node3_9999Host Name : node3Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 4Status Name : MASTERNode Name : Linux_node1_9999Host Name : node1Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 8Status Name : LOSTNode Name : Linux_node2_9999Host Name : node2Delegate IP : 192.168.0.220Pgpool port : 9999Watchdog port : 9000Node priority : 1Status : 7Status Name : STANDBY

根據下面的堆棧,是pgpool通過watchdog將某個後端降級時,阻塞了。這應該是一個bug。

[root@node3 ~]# ps -ef|grep pgpool.confroot 4048 1 0 Nov15 ? 00:00:00 /usr/bin/pgpool -f /etc/pgpool-II/pgpool.conf -nroot 5301 4832 0 00:10 pts/3 00:00:00 grep --color=auto pgpool.conf[root@node3 ~]# pstack 4048#0 0x00007f73647e98d3 in __select_nocancel () from /lib64/libc.so.6#1 0x0000000000493d2e in issue_command_to_watchdog ()#2 0x0000000000494ac3 in wd_degenerate_backend_set ()#3 0x000000000040bcf3 in degenerate_backend_set_ex ()#4 0x000000000040e1c4 in PgpoolMain ()#5 0x0000000000406ec2 in main ()

本次1主2從的架構中,用pgpool實施PostgreSQL的HA,效果並不理想。與pgpool和pgsql部署在一起有關,靠譜的做法是把pgpool部署在單獨的節點或和應用服務器部署在一起。

Android自定義控件系列案例【四】

Android自定義控件系列案例【四】

Android自定義控件系列案例【四】 案例效果: 模擬器上運行有些鋸齒,真機上和預期一樣好 案例分析: 看效果,第一直覺肯定是Android原生態控件中沒有這樣的控

Android性能優化之Splash頁應該這樣設計

Android性能優化之Splash頁應該這樣設計

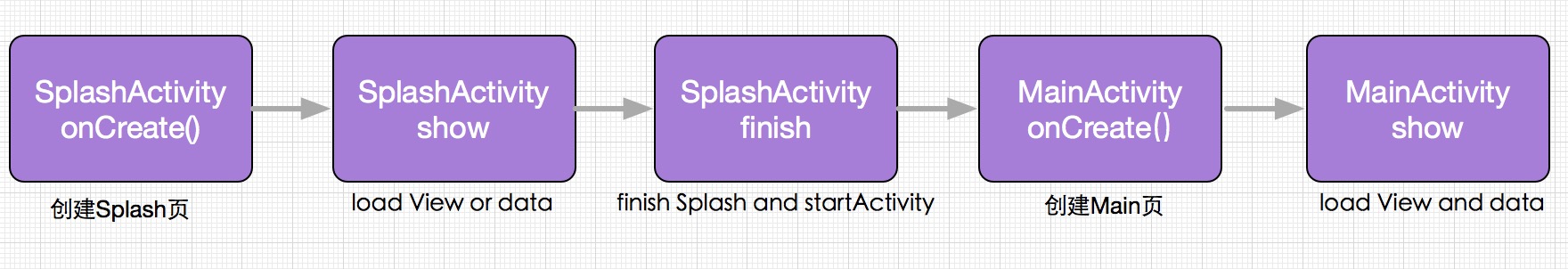

Android性能優化之Splash頁應該這樣設計 目前SplashActivity的設計 目前市場上的應用在啟動時基本上都會先啟動一個SplashActivity,作為

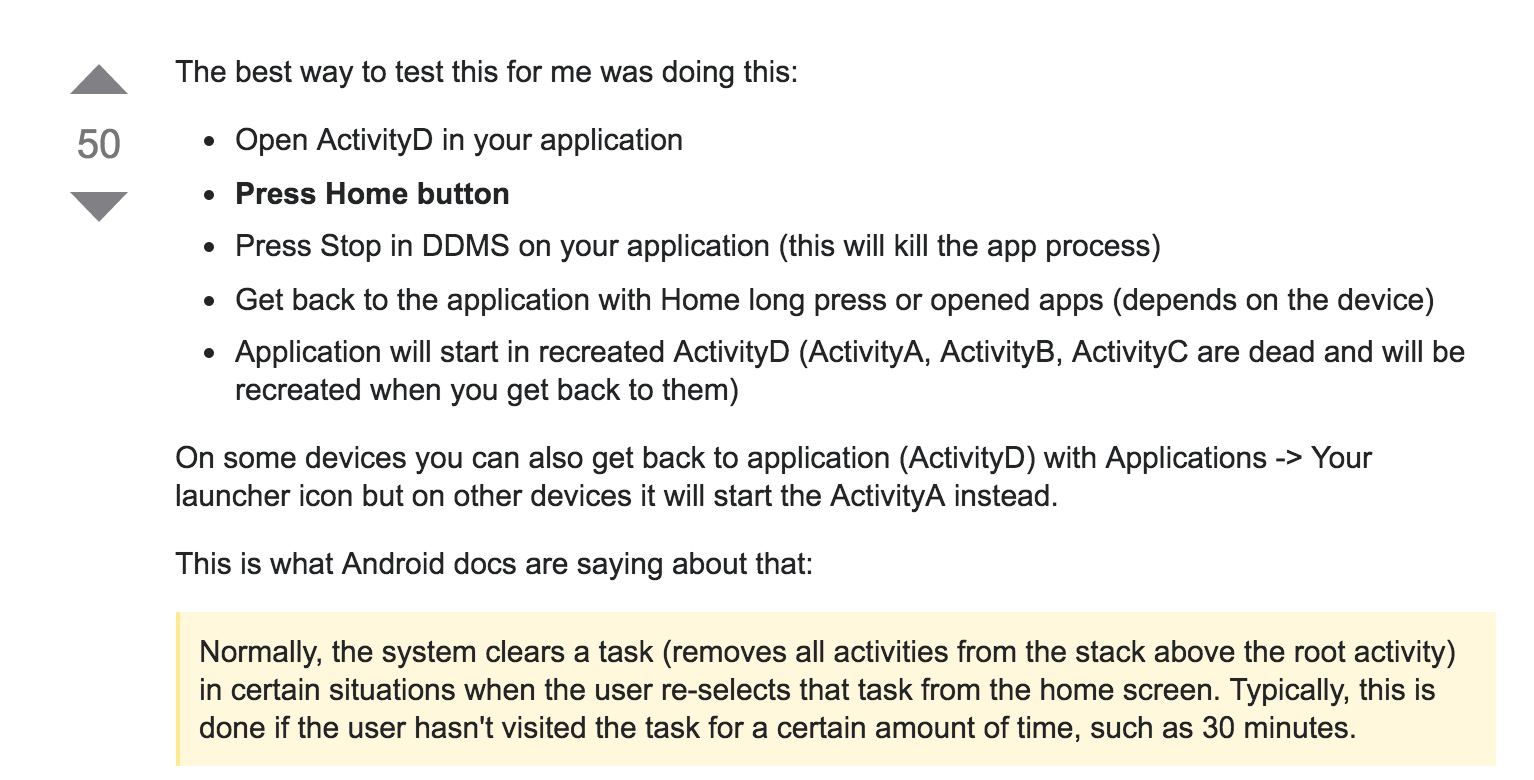

Android app被系統kill的場景,androidkill

Android app被系統kill的場景,androidkill

Android app被系統kill的場景,androidkill 何時發生 當我們的app被切到後台的時候,比如用戶按下了home鍵或者切換到了別的應用,總之是我們的

用原生VideoView進行全屏播放時的問題,videoview全屏播放

用原生VideoView進行全屏播放時的問題,videoview全屏播放

用原生VideoView進行全屏播放時的問題,videoview全屏播放之前參加了一個課程,裡面有一節講到了用視頻作為啟動界面。講師用的是自定義VideoView,重寫o