
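This article is an original translation of the official English documentation for Android MediaCodec. If you repost it, please credit the source: http://www.cnblogs.com/xiaoshubao/p/5368183.html

Starting with API 16, Android provides the MediaCodec class so that developers can handle audio and video encoding and decoding more flexibly. Compared with MediaPlayer, it offers a more complete and richer set of interfaces. See the original API documentation for details.

The original document is long, and I could not find a reasonably complete Chinese explanation of it, so I decided to translate it myself. My main goal is to deepen my own understanding of the class, and I hope it also helps others.

Good references for using MediaCodec:

(1) DEMO1, written by this author: copy the "DecodeActivity.java" class directly into your own project and it is ready to use.

(2) English examples, explanations, and learning materials.

A few notes before starting:

(1) I am not a professional translator and my abilities are limited, so please point out any shortcomings in the article.

(2) The translation is split into two parts. This article is the first part and covers the descriptive overview of the class. The second part will cover the definitions and descriptions of its fields and methods. Later I will consider whether to translate related classes such as MediaExtractor.

(3) As my project progresses, I will extract some demos from it and publish them.

Translation begins: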

The MediaCodec class can be used to access low-level media codecs, i.e. encoder/decoder components. It is part of the Android low-level multimedia support infrastructure, and is normally used together with MediaExtractor, MediaSync, MediaMuxer, MediaCrypto, MediaDrm, Image, Surface, and AudioTrack.

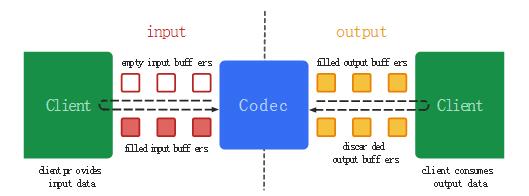

In broad terms, a codec processes input data to generate output data. It processes data asynchronously and uses a set of input and output buffers. At a simplistic level, you request (or receive) an empty input buffer, fill it up with data, and send it to the codec for processing. The codec uses up the data and transforms it into one of its empty output buffers. Finally, you request (or receive) a filled output buffer, consume its contents, and release it back to the codec.

Data types

Codecs operate on three kinds of data: compressed data, raw audio data and raw video data. All three kinds of data can be processed using ByteBuffers, but you should use a Surface for raw video data to improve codec performance. A Surface uses native video buffers without mapping or copying them to ByteBuffers, so it is much more efficient. You normally cannot access the raw video data when using a Surface, but you can use the ImageReader class to access unsecured decoded (raw) video frames. This may still be more efficient than using ByteBuffers, as some native buffers may be mapped into direct ByteBuffers. When using ByteBuffer mode, you can access raw video frames using the Image class and getInput/OutputImage(int).

Compressed buffers

Input buffers (for decoders) and output buffers (for encoders) contain compressed data according to the format's type. For video types this is a single compressed video frame. For audio data this is normally a single access unit: an encoded audio segment, typically containing a few milliseconds of audio as dictated by the format type. This requirement is slightly relaxed, in that a buffer may contain multiple encoded access units of audio. In either case, buffers do not start or end on arbitrary byte boundaries, but rather on frame/access unit boundaries.

Raw audio buffers

Raw audio buffers contain entire frames of PCM audio data. Each frame holds one sample for each channel, in channel order. Each sample is a 16-bit signed integer in native byte order.
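To make the interleaved layout concrete, here is a minimal plain-Java sketch that reads one sample from such a buffer. It makes two assumptions. First, the buffer's remaining bytes hold whole frames. Second, the channel count is already known; on a device it would come from the output MediaFormat. The class and method names are illustrative and are not part of the Android API.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Reads samples from interleaved 16-bit PCM: each frame holds one sample per channel, in channel order. */
final class PcmFrames {
    private static final int BYTES_PER_SAMPLE = 2; // 16-bit signed samples

    /** Number of complete PCM frames in the buffer's remaining bytes. */
    static int frameCount(ByteBuffer pcm, int channelCount) {
        return pcm.remaining() / (BYTES_PER_SAMPLE * channelCount);
    }

    /** Sample for the given frame and channel, read in native byte order without moving the position. */
    static short sampleAt(ByteBuffer pcm, int channelCount, int frame, int channel) {
        int offset = pcm.position() + (frame * channelCount + channel) * BYTES_PER_SAMPLE;
        // duplicate() shares the content but starts big-endian, so set the native order explicitly.
        return pcm.duplicate().order(ByteOrder.nativeOrder()).getShort(offset);
    }
}
```

For stereo data, frame f's left sample is sampleAt(buf, 2, f, 0) and its right sample is sampleAt(buf, 2, f, 1). Dividing frameCount by the sample rate gives the buffer's duration in seconds.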

Raw video buffers

In ByteBuffer mode, video buffers are laid out according to their color format. You can get the supported color formats as an array from getCodecInfo().getCapabilitiesForType(…).colorFormats. Video codecs may support three kinds of color formats:

(1) Native raw video format: this is marked by COLOR_FormatSurface, and it can be used with an input or output Surface.

(2) Flexible YUV buffers (such as COLOR_FormatYUV420Flexible): these can be used with an input/output Surface, as well as in ByteBuffer mode by using getInput/OutputImage(int).

(3) Other, specific formats: these are normally only supported in ByteBuffer mode. Some color formats are vendor specific; others are defined in MediaCodecInfo.CodecCapabilities. For color formats that are equivalent to a flexible format, you can still use getInput/OutputImage(int).

All video codecs support flexible YUV 4:2:0 buffers since LOLLIPOP_MR1.
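This sketch shows what "4:2:0" means for buffer size. It assumes 8-bit samples in tightly packed planes, with no row stride or padding. Real codec buffers often have strides and alignment, which is one reason to go through getInput/OutputImage(int) and the Image planes rather than computing offsets by hand. Treat it as an illustration of the subsampling only.

```java
/** Byte-size arithmetic for a tightly packed 8-bit YUV 4:2:0 frame (no stride or plane padding assumed). */
final class Yuv420Size {
    /** Luma (Y) plane: one byte per pixel. */
    static int lumaBytes(int width, int height) {
        return width * height;
    }

    /** Each chroma plane (U or V) is subsampled by 2 in both directions, rounding up for odd sizes. */
    static int chromaPlaneBytes(int width, int height) {
        return ((width + 1) / 2) * ((height + 1) / 2);
    }

    /** Y + U + V; for even dimensions this equals width * height * 3 / 2. */
    static int frameBytes(int width, int height) {
        return lumaBytes(width, height) + 2 * chromaPlaneBytes(width, height);
    }
}
```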

States

During its life, a codec conceptually exists in one of three states: Stopped, Executing or Released. The Stopped collective state is actually the conglomeration of three states: Uninitialized, Configured and Error. The Executing state conceptually progresses through three sub-states: Flushed, Running and End-of-Stream.
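One way to keep the collective states and their sub-states straight is to model them explicitly. The enum below is a hypothetical helper for application code, since MediaCodec does not expose its state. It maps each state named in the paragraph above to its collective group.

```java
/** Hypothetical application-side model of the codec lifecycle; not part of the MediaCodec API. */
final class CodecStates {
    /** The three top-level (collective) states. */
    enum Group { STOPPED, EXECUTING, RELEASED }

    /** Concrete states, each tagged with the collective state it belongs to. */
    enum State {
        UNINITIALIZED(Group.STOPPED),
        CONFIGURED(Group.STOPPED),
        ERROR(Group.STOPPED),
        FLUSHED(Group.EXECUTING),
        RUNNING(Group.EXECUTING),
        END_OF_STREAM(Group.EXECUTING),
        RELEASED(Group.RELEASED);

        final Group group;

        State(Group group) {
            this.group = group;
        }
    }
}
```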

Note: on LOLLIPOP, the format passed to MediaCodecList.findDecoder/EncoderForFormat must not contain a frame rate. Use format.setString(MediaFormat.KEY_FRAME_RATE, null) to clear any existing frame rate setting in the format.

You can also create the preferred codec for a specific MIME type using createDecoder/EncoderByType(String). This, however, cannot be used to inject features, and may create a codec that cannot handle the specific desired media format.

Creating secure decoders

On versions KITKAT_WATCH and earlier, secure codecs might not be listed in MediaCodecList, but may still be available on the system. Secure codecs that exist can be instantiated by name only, by appending ".secure" to the name of a regular codec; the names of all secure codecs must end in ".secure". createByCodecName(String) will throw an IOException if the codec is not present on the system.
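The naming rule can be captured in a small helper. This is a sketch, and the codec name used in the test is hypothetical. On a device, the result would be passed to MediaCodec.createByCodecName(String), which throws IOException when no such codec exists.

```java
/** Derives a secure codec name from a regular one (all secure codec names end in ".secure"). */
final class SecureCodecNames {
    static final String SECURE_SUFFIX = ".secure";

    /** Appends ".secure" unless the name already ends with it. */
    static String secureNameFor(String regularName) {
        return regularName.endsWith(SECURE_SUFFIX) ? regularName : regularName + SECURE_SUFFIX;
    }

    /** True if the name follows the secure-codec naming rule. */
    static boolean isSecureName(String codecName) {
        return codecName.endsWith(SECURE_SUFFIX);
    }
}
```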

From LOLLIPOP onwards, you should use the FEATURE_SecurePlayback feature in the media format to create a secure decoder.

Initialization

After creating the codec, you can set a callback using setCallback if you want to process data asynchronously. Then, configure the codec using the specific media format. This is when you can specify the output Surface for video producers, i.e. codecs that generate raw video data (e.g. video decoders). This is also when you can set the decryption parameters for secure codecs (see MediaCrypto). Finally, since some codecs can operate in multiple modes, you must specify whether you want the codec to work as a decoder or an encoder.

Since LOLLIPOP, you can query the resulting input and output format in the Configured state. You can use this to verify the resulting configuration, e.g. color formats, before starting the codec.

You may want to process raw input video buffers natively with a video consumer, meaning a codec that processes raw video input, such as a video encoder. In that case, create a destination Surface for your input data using createInputSurface() after configuration. Alternatively, set up the codec to use a previously created persistent input surface by calling setInputSurface(Surface).

Codec-specific data

Some formats, notably AAC audio and MPEG4, H.264 and H.265 video, require the actual data to be prefixed by a number of buffers containing setup data, or codec-specific data. When processing such compressed formats, this data must be submitted to the codec after start() and before any frame data. Such data must be marked using the flag BUFFER_FLAG_CODEC_CONFIG in a call to queueInputBuffer.

Codec-specific data can also be included in the format passed to configure, in ByteBuffer entries with keys "csd-0", "csd-1", etc. These keys are always included in the track MediaFormat obtained from the MediaExtractor. Codec-specific data in the format is automatically submitted to the codec upon start(); you MUST NOT submit this data explicitly. If the format did not contain codec-specific data, you can choose to submit it using the specified number of buffers in the correct order, according to the format requirements. Alternatively, you can concatenate all codec-specific data and submit it as a single codec-config buffer.
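If you take the concatenation route, the buffers must keep their csd-0, csd-1, … order. The sketch below uses java.nio and assumes each input ByteBuffer's readable bytes (position to limit) are exactly one csd entry. The resulting buffer would then be copied into an input buffer and queued with BUFFER_FLAG_CODEC_CONFIG. The class name is illustrative.

```java
import java.nio.ByteBuffer;
import java.util.List;

/** Concatenates codec-specific data buffers, in order, into a single codec-config buffer. */
final class CsdConcat {
    static ByteBuffer concat(List<ByteBuffer> csdInOrder) {
        int total = 0;
        for (ByteBuffer csd : csdInOrder) {
            total += csd.remaining();
        }
        ByteBuffer out = ByteBuffer.allocate(total);
        for (ByteBuffer csd : csdInOrder) {
            out.put(csd.duplicate()); // duplicate() so the caller's buffers are not consumed
        }
        out.flip(); // position 0, limit = total: ready to be copied into an input buffer
        return out;
    }
}
```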

Android uses the following codec-specific data buffers. These are also required to be set in the track format for proper MediaMuxer track configuration. Each parameter set, and each codec-specific-data section marked with (*), must start with the start code "\x00\x00\x00\x01".
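Every marked section must begin with the 4-byte start code, so it can help to normalize parameter sets before putting them into the track format. The sketch below works on plain byte arrays. It assumes the input is either a raw NAL payload or already framed with a start code. The helper name is illustrative.

```java
import java.util.Arrays;

/** Ensures a codec-specific-data section begins with the start code 00 00 00 01. */
final class StartCodes {
    static final byte[] START_CODE = {0x00, 0x00, 0x00, 0x01};

    static boolean hasStartCode(byte[] data) {
        return data.length >= START_CODE.length
                && Arrays.equals(Arrays.copyOf(data, START_CODE.length), START_CODE);
    }

    /** Returns data unchanged if it already starts with the start code, otherwise a prefixed copy. */
    static byte[] withStartCode(byte[] data) {
        if (hasStartCode(data)) {
            return data;
        }
        byte[] out = new byte[START_CODE.length + data.length];
        System.arraycopy(START_CODE, 0, out, 0, START_CODE.length);
        System.arraycopy(data, 0, out, START_CODE.length, data.length);
        return out;
    }
}
```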

Input and output buffers are referenced by a buffer ID in API calls. After a successful call to start(), the client "owns" neither input nor output buffers. In synchronous mode, call dequeueInput/OutputBuffer(…) to obtain (get ownership of) an input or output buffer from the codec. In asynchronous mode, you will automatically receive available buffers via the MediaCodec.Callback.onInput/OutputBufferAvailable(…) callbacks.

Upon obtaining an input buffer, fill it with data and submit it to the codec using queueInputBuffer, or queueSecureInputBuffer if using decryption. Do not submit multiple input buffers with the same timestamp, unless it is codec-specific data marked as such.
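The timestamp rule can be checked in application code before queuing. This sketch uses a hypothetical QueuedInput type. It mirrors the constant value 2 of MediaCodec.BUFFER_FLAG_CODEC_CONFIG so it runs off-device; in real code, use the MediaCodec constant itself.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Checks that no two non-codec-config input buffers share a presentation timestamp. */
final class InputTimestampCheck {
    // Mirrors MediaCodec.BUFFER_FLAG_CODEC_CONFIG (value 2) so the sketch runs without the Android SDK.
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;

    /** Hypothetical record of what was passed to queueInputBuffer. */
    static final class QueuedInput {
        final long presentationTimeUs;
        final int flags;

        QueuedInput(long presentationTimeUs, int flags) {
            this.presentationTimeUs = presentationTimeUs;
            this.flags = flags;
        }
    }

    static boolean timestampsAreValid(List<QueuedInput> inputs) {
        Set<Long> seen = new HashSet<>();
        for (QueuedInput in : inputs) {
            boolean isCodecConfig = (in.flags & BUFFER_FLAG_CODEC_CONFIG) != 0;
            // Codec-config buffers are exempt; any other repeated timestamp breaks the rule.
            if (!isCodecConfig && !seen.add(in.presentationTimeUs)) {
                return false;
            }
        }
        return true;
    }
}
```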

The codec in turn returns a read-only output buffer. In asynchronous mode it arrives via the onOutputBufferAvailable callback; in synchronous mode it is the response to a dequeueOutputBuffer call. After the output buffer has been processed, call one of the releaseOutputBuffer methods to return the buffer to the codec.

You are not required to resubmit or release buffers to the codec immediately. However, holding onto input and/or output buffers may stall the codec, and this behavior is device dependent. Specifically, a codec may hold off on generating output buffers until all outstanding buffers have been released or resubmitted. Therefore, hold onto available buffers for as short a time as possible.

Depending on the API version, you can process data in three ways:

```java
import android.media.MediaCodec;
import android.media.MediaFormat;
import java.nio.ByteBuffer;

MediaCodec codec = MediaCodec.createByCodecName(name);
MediaFormat mOutputFormat; // member variable
codec.setCallback(new MediaCodec.Callback() {
    @Override
    public void onInputBufferAvailable(MediaCodec mc, int inputBufferId) {
        ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
        // fill inputBuffer with valid data
        // …
        codec.queueInputBuffer(inputBufferId, …);
    }

    @Override
    public void onOutputBufferAvailable(MediaCodec mc, int outputBufferId, MediaCodec.BufferInfo info) {
        ByteBuffer outputBuffer = codec.getOutputBuffer(outputBufferId);
        MediaFormat bufferFormat = codec.getOutputFormat(outputBufferId); // option A
        // bufferFormat is equivalent to mOutputFormat
        // outputBuffer is ready to be processed or rendered.
        // …
        codec.releaseOutputBuffer(outputBufferId, …);
    }

    @Override
    public void onOutputFormatChanged(MediaCodec mc, MediaFormat format) {
        // Subsequent data will conform to new format.
        // Can ignore if using getOutputFormat(outputBufferId)
        mOutputFormat = format; // option B
    }

    @Override
    public void onError(MediaCodec mc, MediaCodec.CodecException e) {
        // …
    }
});
codec.configure(format, …);
mOutputFormat = codec.getOutputFormat(); // option B
codec.start();
// wait for processing to complete
codec.stop();
codec.release();
```

Synchronous processing using buffers

Since LOLLIPOP, you should retrieve input and output buffers using getInput/OutputBuffer(int) and/or getInput/OutputImage(int), even when using the codec in synchronous mode. This allows certain optimizations by the framework, e.g. when processing dynamic content. This optimization is disabled if you call getInput/OutputBuffers().

Note: do not mix the methods of using buffers and buffer arrays at the same time. Specifically, only call getInput/OutputBuffers directly after start() or after having dequeued an output buffer ID with the value of INFO_OUTPUT_FORMAT_CHANGED.

MediaCodec is typically used like this in synchronous mode:

Synchronous processing using buffer arrays (deprecated)

In versions KITKAT_WATCH and before, the set of input and output buffers is represented by ByteBuffer[] arrays. After a successful call to start(), retrieve the buffer arrays using getInput/OutputBuffers(). Use the buffer IDs as indices into these arrays (when non-negative). There is no inherent correlation between the size of the arrays and the number of input and output buffers used by the system, although the array size provides an upper bound.
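Negative return values of dequeueOutputBuffer are status codes, not buffer IDs, so they must never be used as array indices. The sketch mirrors the values of INFO_TRY_AGAIN_LATER (-1), INFO_OUTPUT_FORMAT_CHANGED (-2) and INFO_OUTPUT_BUFFERS_CHANGED (-3) so it runs without the Android SDK. On a device, use the MediaCodec constants.

```java
import java.nio.ByteBuffer;

/** Interprets a dequeueOutputBuffer result when using the deprecated ByteBuffer[] arrays. */
final class DequeueResult {
    // Mirror the MediaCodec constant values so the sketch runs off-device.
    static final int INFO_TRY_AGAIN_LATER = -1;
    static final int INFO_OUTPUT_FORMAT_CHANGED = -2;
    static final int INFO_OUTPUT_BUFFERS_CHANGED = -3;

    /** Only non-negative results are buffer IDs that may index the array. */
    static ByteBuffer bufferFor(ByteBuffer[] buffers, int result) {
        if (result < 0) {
            return null; // an INFO_* status code, not a buffer ID
        }
        return buffers[result];
    }

    static String describe(int result) {
        switch (result) {
            case INFO_TRY_AGAIN_LATER:
                return "try again later";
            case INFO_OUTPUT_FORMAT_CHANGED:
                return "output format changed";
            case INFO_OUTPUT_BUFFERS_CHANGED:
                return "output buffers changed: call getOutputBuffers() again";
            default:
                return result >= 0 ? "buffer " + result : "unknown";
        }
    }
}
```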

End-of-stream handling

When you reach the end of the input data, you must signal it to the codec by specifying the BUFFER_FLAG_END_OF_STREAM flag in the call to queueInputBuffer. You can do this on the last valid input buffer, or by submitting an additional empty input buffer with the end-of-stream flag set. If you use an empty buffer, its timestamp will be ignored.

The codec will continue to return output buffers until it eventually signals the end of the output stream. It does so by specifying the same end-of-stream flag in the MediaCodec.BufferInfo, which is either set in dequeueOutputBuffer or returned via onOutputBufferAvailable. This flag can be set on the last valid output buffer, or on an empty buffer after the last valid output buffer. The timestamp of such an empty buffer should be ignored.
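Both sides of the end-of-stream handshake come down to a bit test on the flags value. The sketch mirrors MediaCodec.BUFFER_FLAG_END_OF_STREAM (value 4) so it runs without the Android SDK. The helper names are illustrative.

```java
/** End-of-stream flag handling for input submission and output inspection. */
final class EndOfStream {
    // Mirrors MediaCodec.BUFFER_FLAG_END_OF_STREAM (value 4) so the sketch runs off-device.
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    /** Flags for queueInputBuffer: set EOS on the last valid buffer (or on an extra empty one). */
    static int inputFlags(boolean isLastBuffer) {
        return isLastBuffer ? BUFFER_FLAG_END_OF_STREAM : 0;
    }

    /** True when an output BufferInfo.flags value marks the end of the output stream. */
    static boolean isEndOfStream(int bufferInfoFlags) {
        return (bufferInfoFlags & BUFFER_FLAG_END_OF_STREAM) != 0;
    }

    /** An EOS output buffer may be empty; then it carries no data and its timestamp should be ignored. */
    static boolean hasPayload(int bufferInfoSize) {
        return bufferInfoSize > 0;
    }
}
```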

Do not submit additional input buffers after signaling the end of the input stream, unless the codec has been flushed, or stopped and restarted.

Using an output Surface

When using an output Surface, data processing is nearly identical to ByteBuffer mode. However, the output buffers will not be accessible, and are represented as null values. For example, getOutputBuffer/Image(int) will return null, and getOutputBuffers() will return an array containing only nulls.

When using an output Surface, you can select whether or not to render each output buffer on the surface. You have three choices:

(1) Do not render the buffer: call releaseOutputBuffer(bufferId, false).

(2) Render the buffer with the default timestamp: call releaseOutputBuffer(bufferId, true).

(3) Render the buffer with a specific timestamp: call releaseOutputBuffer(bufferId, timestamp).

Since M, the default timestamp is the presentation timestamp of the buffer, converted to nanoseconds. It was not defined prior to that.
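BufferInfo.presentationTimeUs is in microseconds, but the render timestamp passed to releaseOutputBuffer(int, long) is in nanoseconds, so rendering with a specific timestamp needs a conversion. For illustration, the sketch assumes you schedule frames relative to a System.nanoTime() value captured when the first frame is rendered. That is the timebase render timestamps are compared against when rendering to a SurfaceView. renderTimeNs is a hypothetical helper, not an API method.

```java
import java.util.concurrent.TimeUnit;

/** Microsecond presentation timestamps to nanosecond render timestamps. */
final class RenderTimestamps {
    /** Presentation timestamp (microseconds, as in BufferInfo) converted to nanoseconds. */
    static long presentationTimeNs(long presentationTimeUs) {
        return TimeUnit.MICROSECONDS.toNanos(presentationTimeUs);
    }

    /**
     * Hypothetical scheduling helper: keeps each frame at the same offset from the first frame
     * as its presentation timestamp, starting from a System.nanoTime() value.
     */
    static long renderTimeNs(long firstFrameNanoTime, long firstPtsUs, long ptsUs) {
        return firstFrameNanoTime + presentationTimeNs(ptsUs - firstPtsUs);
    }
}
```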

Also since M, you can change the output Surface dynamically using setOutputSurface.

Using an input Surface

When using an input Surface, there are no accessible input buffers, because buffers are automatically passed from the input surface to the codec. Calling dequeueInputBuffer will throw an IllegalStateException, and getInputBuffers() returns a bogus ByteBuffer[] array that MUST NOT be written into.

Call signalEndOfInputStream() to signal end-of-stream. The input surface will stop submitting data to the codec immediately after this call.

Seeking and adaptive playback support

Video decoders (and, in general, codecs that consume compressed video data) behave differently regarding seeks and format changes, depending on whether they support and are configured for adaptive playback. You can check whether a decoder supports adaptive playback via CodecCapabilities.isFeatureSupported(String). Adaptive playback support for video decoders is only activated if you configure the codec to decode onto a Surface.

Stream boundaries and key frames

The input data after start() or flush() must start at a suitable stream boundary: the first frame must be a key frame. A key frame can be decoded completely on its own (for most codecs this means an I-frame). In addition, no frame that is displayed after a key frame may refer to frames before that key frame.
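For H.264 in Annex-B form, one practical test for a suitable key frame is whether the access unit contains an IDR slice (nal_unit_type 5). The sketch below scans for that. It is illustrative only, assumes Annex-B start codes, and does not cover other video formats, each of which has its own key-frame rule.

```java
import java.util.List;

/** Finds H.264 access units that can start decoding (contain an IDR slice). Assumes Annex-B framing. */
final class H264KeyFrames {
    static final int NAL_TYPE_IDR = 5; // coded slice of an IDR picture

    /** True if any NAL unit in this access unit has nal_unit_type 5. */
    static boolean containsIdr(byte[] accessUnit) {
        for (int i = 0; i + 3 < accessUnit.length; i++) {
            // 00 00 01 matches both 3-byte and (the tail of) 4-byte start codes.
            if (accessUnit[i] == 0 && accessUnit[i + 1] == 0 && accessUnit[i + 2] == 1) {
                int nalType = accessUnit[i + 3] & 0x1F; // low 5 bits of the NAL header
                if (nalType == NAL_TYPE_IDR) {
                    return true;
                }
            }
        }
        return false;
    }

    /** Index of the first access unit that is a suitable starting point, or -1 if there is none. */
    static int firstKeyFrame(List<byte[]> accessUnits) {
        for (int i = 0; i < accessUnits.size(); i++) {
            if (containsIdr(accessUnits.get(i))) {
                return i;
            }
        }
        return -1;
    }
}
```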

The following table summarizes suitable key frames for various video formats.

Because all output buffers are immediately revoked at the point of the flush, you may want to first signal and then wait for the end-of-stream before you call flush. The input data after a flush must start at a suitable stream boundary/key frame.

Note: the format of the data submitted after a flush must not change. flush() does not support format discontinuities; for that, a full stop() - configure(…) - start() cycle is necessary.

Also note: you may flush the codec too soon after start(), generally before the first output buffer or output format change is received. In that case, you will need to resubmit the codec-specific data to the codec. See the codec-specific data section for more information.

For decoders that support and are configured for adaptive playback

To start decoding data that is not adjacent to previously submitted data (i.e. after a seek), it is not necessary to flush the decoder. However, input data after the discontinuity must start at a suitable stream boundary/key frame.

For some video formats, namely H.264, H.265, VP8 and VP9, it is also possible to change the picture size or configuration mid-stream. To do this, you must package the entire new codec-specific configuration data together with the key frame into a single buffer (including any start codes), and submit it as a regular input buffer.

Just after the picture-size change takes place, and before any frames with the new size have been returned, you will receive an INFO_OUTPUT_FORMAT_CHANGED return value from dequeueOutputBuffer or an onOutputFormatChanged callback.

Note: just as in the case of codec-specific data, be careful when calling flush() shortly after you have changed the picture size. If you have not yet received confirmation of the picture size change, you will need to repeat the request for the new picture size.
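The seek and format-change rules above can be summarized as a single decision. This is a hypothetical helper, not an Android API. It assumes you already know whether adaptive playback is active, and it treats "format changes" as a picture-size/configuration change. The MIME strings are the MediaFormat values for H.264, H.265, VP8 and VP9.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical helper: how to resume input after a discontinuity such as a seek. */
final class DiscontinuityPlan {
    enum Action {
        SUBMIT_FROM_KEY_FRAME, // no flush; next input starts at a key frame (plus new csd if resized)
        FLUSH_THEN_KEY_FRAME,  // flush(), then resume input at a key frame
        STOP_CONFIGURE_START   // full stop() - configure(…) - start() cycle
    }

    // Formats the documentation names as supporting mid-stream picture-size changes.
    private static final Set<String> MID_STREAM_RESIZE = new HashSet<>(Arrays.asList(
            "video/avc", "video/hevc", "video/x-vnd.on2.vp8", "video/x-vnd.on2.vp9"));

    static Action plan(String mime, boolean adaptivePlayback, boolean formatChanges) {
        if (adaptivePlayback && (!formatChanges || MID_STREAM_RESIZE.contains(mime))) {
            return Action.SUBMIT_FROM_KEY_FRAME;
        }
        if (formatChanges) {
            return Action.STOP_CONFIGURE_START; // flush() does not support format discontinuities
        }
        return Action.FLUSH_THEN_KEY_FRAME;
    }
}
```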

Error handling

The factory methods createByCodecName and createDecoder/EncoderByType throw IOException on failure, which you must catch or declare to pass up. MediaCodec methods throw IllegalStateException when the method is called from a codec state that does not allow it; this is typically due to incorrect application API usage. Methods involving secure buffers may throw MediaCodec.CryptoException, which has further error information obtainable from getErrorCode().

Internal codec errors result in a MediaCodec.CodecException, even when the application is correctly using the API. Causes include media content corruption, hardware failure, resource exhaustion, and so forth. The recommended action when receiving a CodecException can be determined by calling isRecoverable() and isTransient():

(1) Recoverable errors: if isRecoverable() returns true, call stop(), configure(…), and start() to recover.

(2) Transient errors: if isTransient() returns true, resources are temporarily unavailable and the method may be retried at a later time.

(3) Fatal errors: if both isRecoverable() and isTransient() return false, the CodecException is fatal and the codec must be reset or released.

isRecoverable() and isTransient() never both return true.
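The three cases map directly onto a small decision function. This sketch takes the two boolean results as plain parameters so it runs without the Android SDK. Inside an onError callback, you would pass e.isRecoverable() and e.isTransient(). The enum and class names are illustrative.

```java
/** Maps CodecException.isRecoverable()/isTransient() results to the recommended action. */
final class CodecErrorPolicy {
    enum Action { STOP_CONFIGURE_START, RETRY_LATER, RESET_OR_RELEASE }

    /**
     * @param recoverable    result of CodecException.isRecoverable()
     * @param transientError result of CodecException.isTransient() ("transient" is a Java keyword)
     */
    static Action actionFor(boolean recoverable, boolean transientError) {
        if (recoverable && transientError) {
            throw new IllegalArgumentException("isRecoverable() and isTransient() are never both true");
        }
        if (recoverable) {
            return Action.STOP_CONFIGURE_START;
        }
        if (transientError) {
            return Action.RETRY_LATER;
        }
        return Action.RESET_OR_RELEASE; // fatal error
    }
}
```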

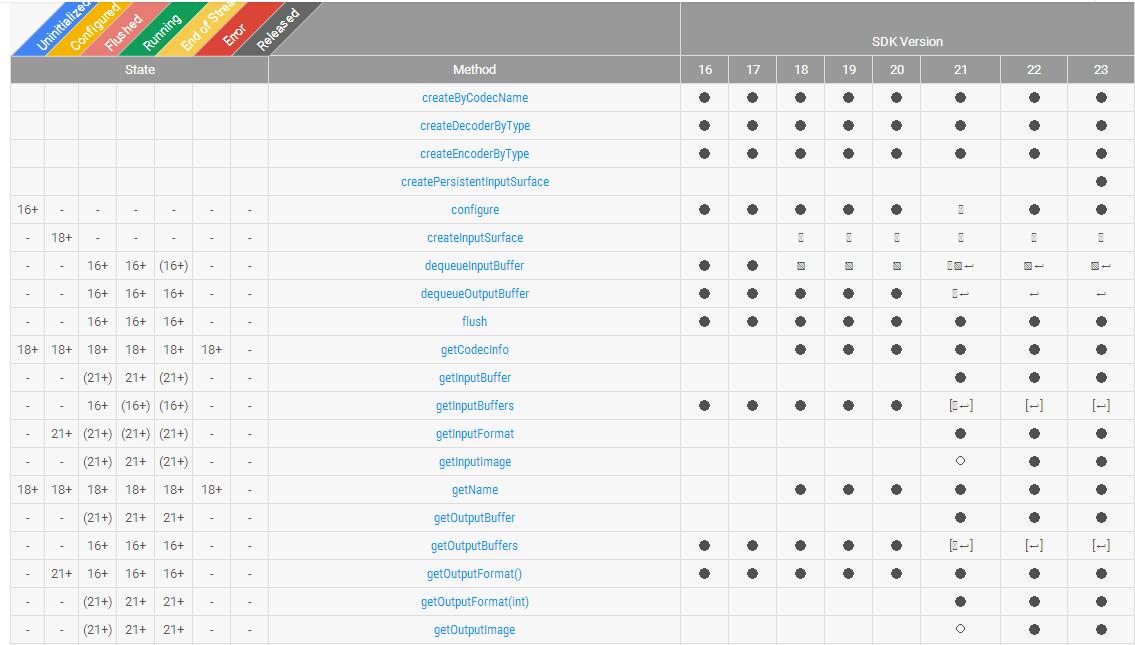

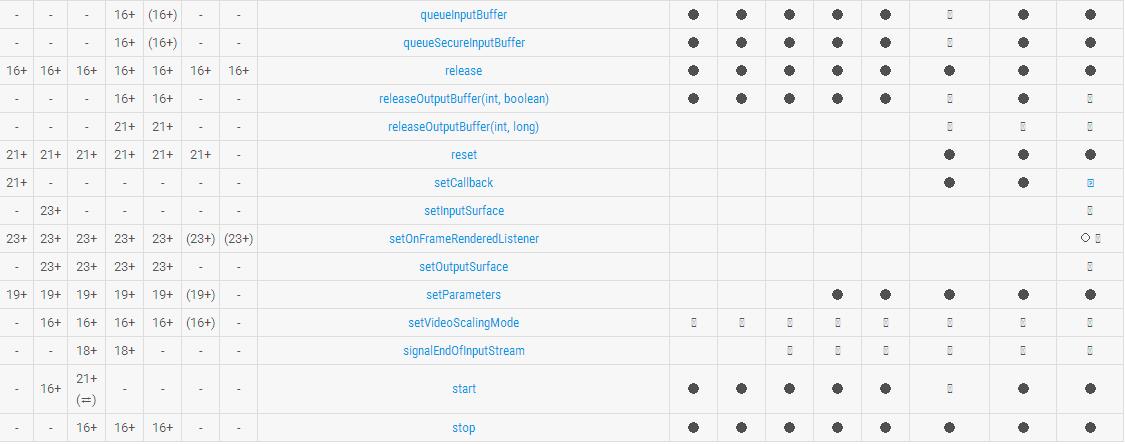

Valid API calls and API history

This section summarizes the valid API calls in each state and the API history of the MediaCodec class. For API version numbers, see Build.VERSION_CODES.

活動的生命周期(三):實例上機課,生命周期上機

活動的生命周期(三):實例上機課,生命周期上機

活動的生命周期(三):實例上機課,生命周期上機 讓我們再來回顧一下上節課中分享的7個生命周期;分別是:onCreate()、onSar

TabLayout和ViewPager簡單實現頁卡的滑動,tablayoutviewpager

TabLayout和ViewPager簡單實現頁卡的滑動,tablayoutviewpager

TabLayout和ViewPager簡單實現頁卡的滑動,tablayoutviewpager首先需要在當前的module中的build Gradle的 dependen

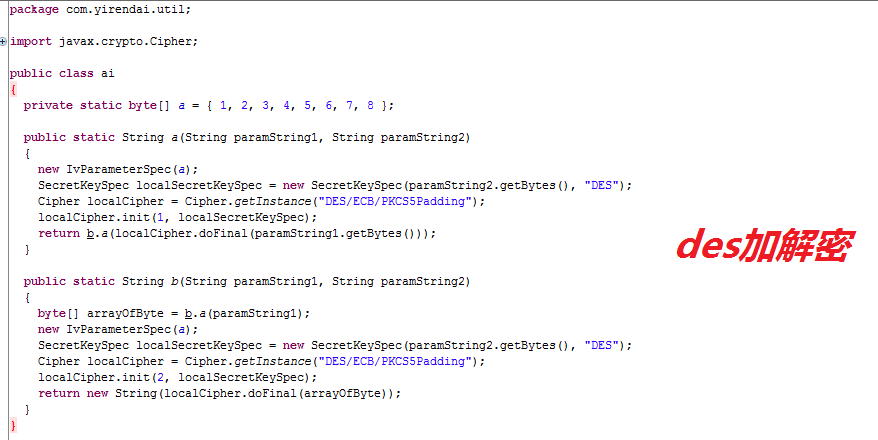

Android安全開發之淺談密鑰硬編碼,android淺談密鑰

Android安全開發之淺談密鑰硬編碼,android淺談密鑰

Android安全開發之淺談密鑰硬編碼,android淺談密鑰Android安全開發之淺談密鑰硬編碼 作者:伊樵、呆狐@阿裡聚安全 1 簡介 在阿裡聚安全的

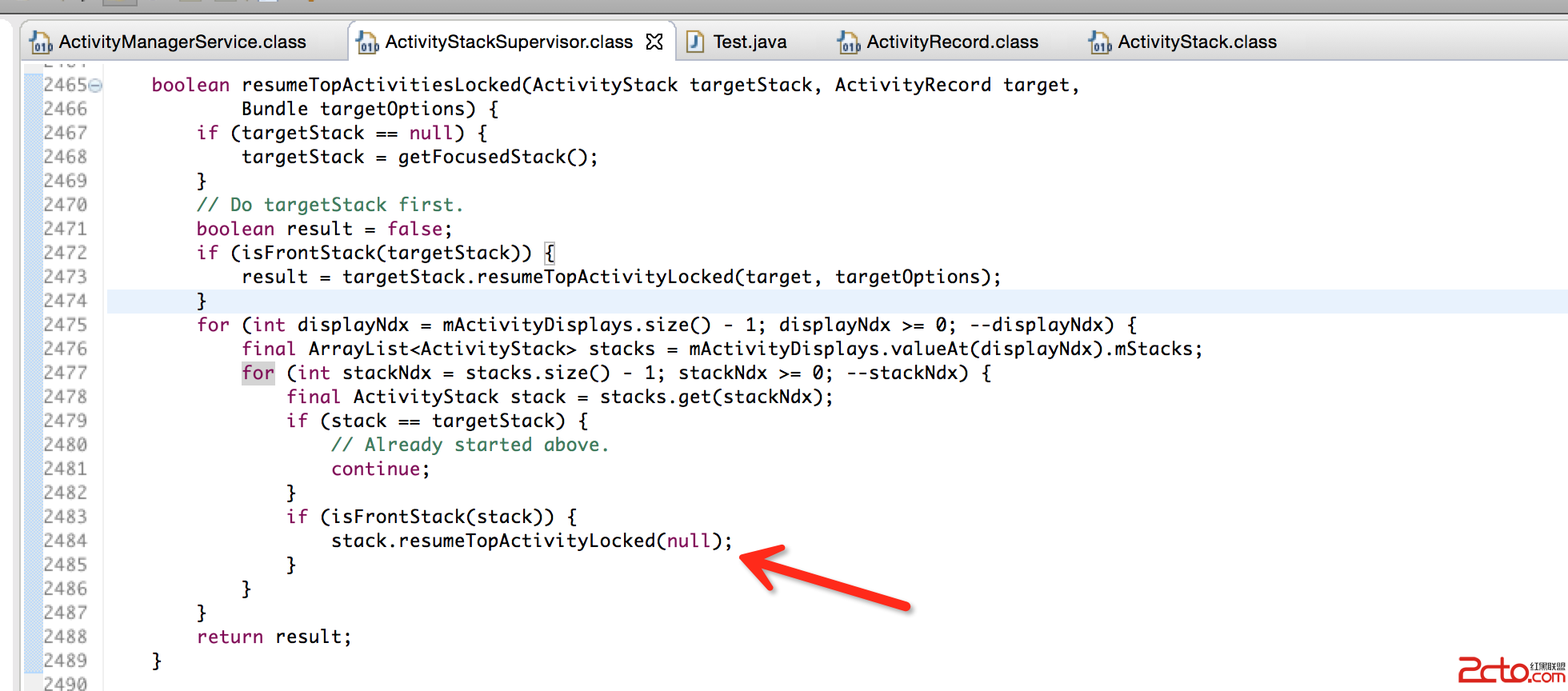

android插件開發-就是你了!啟動吧!插件的activity(二)

android插件開發-就是你了!啟動吧!插件的activity(二)

android插件開發-就是你了!啟動吧!插件的activity(二) 這篇博客是上篇的延續,在閱讀之前先閱讀第一部分:第一部分 我們在啟動插件的activity時

android Unable toexecute dex: method ID not in [0, 0xffff]: 65536問題

android Unable toexecute dex: method ID not in [0, 0xffff]: 65536問題

android Unable toexecute dex: method